TextTiling Segmentation

Depth score:

Difference between position and adjacent peaks

E.g., (ya1 − ya2) + (ya3 − ya2)
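The depth score can be sketched in a few lines. This is a minimal illustration, assuming the input is a list of similarity values between adjacent text blocks (the name `sims` and the function `depth_scores` are hypothetical). For each gap it climbs to the nearest peak on either side and sums the two drops, matching (ya1 − ya2) + (ya3 − ya2) with ya2 as the valley.

```python
def depth_scores(sims):
    """Depth score per gap: (left peak - value) + (right peak - value)."""
    scores = []
    for i, s in enumerate(sims):
        # Climb left while similarity keeps rising (or stays level).
        left = s
        j = i - 1
        while j >= 0 and sims[j] >= left:
            left = sims[j]
            j -= 1
        # Climb right in the same way.
        right = s
        j = i + 1
        while j < len(sims) and sims[j] >= right:
            right = sims[j]
            j += 1
        scores.append((left - s) + (right - s))
    return scores


# Hypothetical similarity curve: a valley between two peaks.
sims = [0.8, 0.3, 0.7]
scores = depth_scores(sims)
```

Gaps with a large depth score are the candidate segment boundaries; how many to keep (a threshold or a fixed count) is a separate choice not covered here.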

Evaluation

How about precision/recall/F-measure?

$$\ldots = \frac{1}{N-k} \sum_{i=1}^{N-k} \ldots$$
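The 1/(N − k) average over i = 1 … N − k is the shape of window-based segmentation metrics, which give partial credit for near-misses where precision/recall do not. The sketch below assumes the metric meant is WindowDiff; that name does not appear in the text, so treat it as an assumption. Segmentations are represented as 0/1 lists (1 = boundary after that unit), and `window_diff` is a hypothetical helper, not a library call.

```python
def window_diff(ref, hyp, k):
    """Fraction of length-k windows where ref and hyp disagree on boundary count.

    Assumed representation: ref[i] == 1 means a boundary follows unit i.
    """
    if len(ref) != len(hyp):
        raise ValueError("ref and hyp must have the same length")
    n = len(ref)
    if not 0 < k < n:
        raise ValueError("k must satisfy 0 < k < N")
    errors = 0
    for i in range(n - k):  # N - k windows, as in the normalisation
        if sum(ref[i:i + k]) != sum(hyp[i:i + k]):
            errors += 1
    return errors / (n - k)


ref       = [0, 0, 1, 0, 0, 0]
near_miss = [0, 0, 0, 1, 0, 0]  # boundary off by one position
full_miss = [0, 0, 0, 0, 0, 0]  # boundary not found at all

score_exact = window_diff(ref, ref, k=3)
score_near = window_diff(ref, near_miss, k=3)
score_miss = window_diff(ref, full_miss, k=3)
```

Lower is better: the off-by-one hypothesis is penalised less than the one that misses the boundary entirely, whereas exact-match precision/recall would score both as complete failures.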

Text Coherence

Cohesion – repetition, etc. – does not imply coherence

Coherence relations:

Possible meaning relations between utterances in discourse

Result: Infer state in S0 causes state in S1

The Tin Woodman was caught in the rain. His joints rusted.

Explanation: Infer state in S1 causes state in S0

John hid Bill’s car keys. He was drunk.

Coherence Analysis

S1: John went to the bank to deposit his paycheck.

Rhetorical Structure Theory

Mann & Thompson (1987)

Assign coherence relations between spans

Create a representation over whole text => parse

Discourse structure

RST trees

Fine-grained, hierarchical structure

Clause-based units

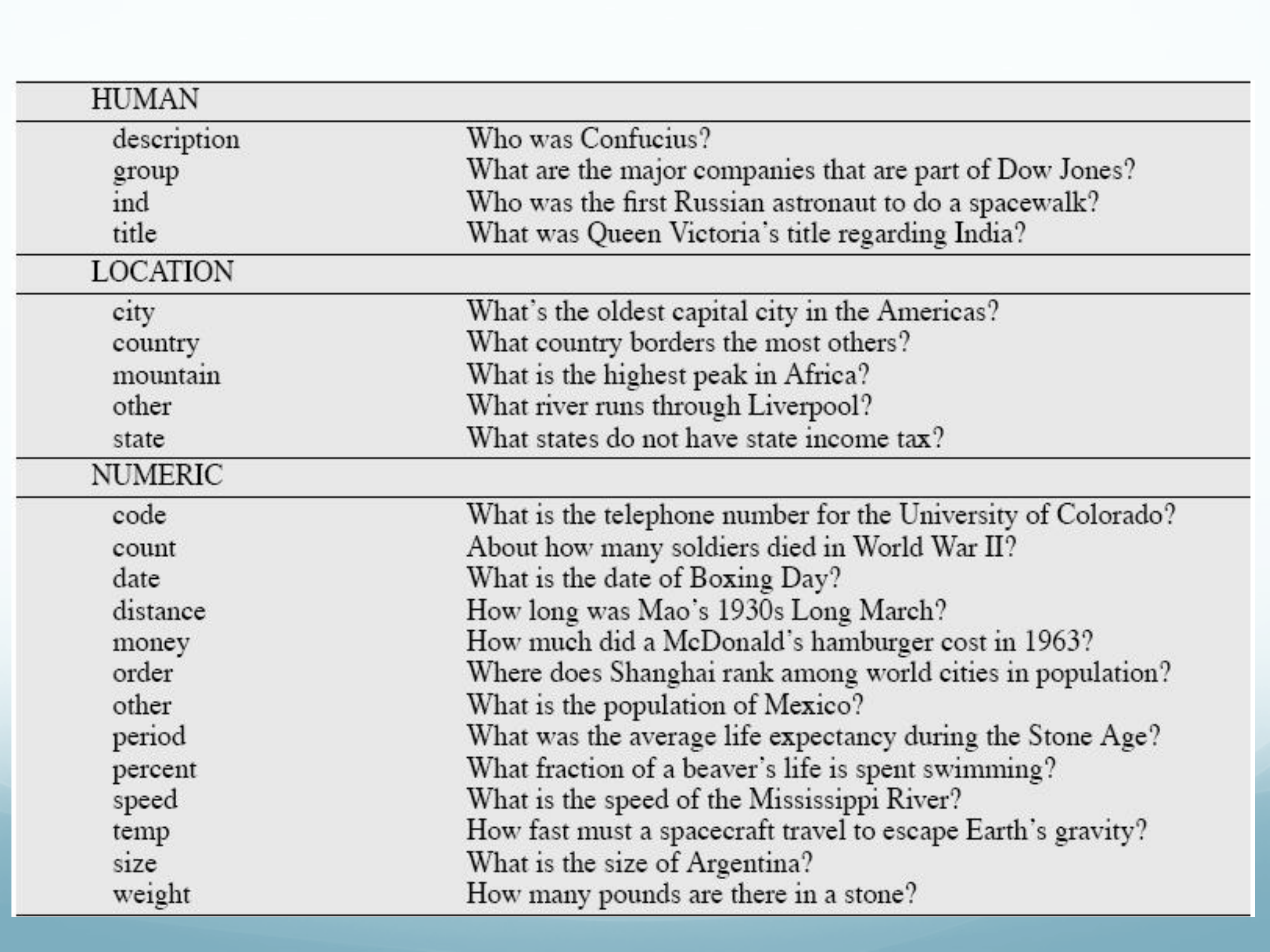

Penn Discourse Treebank PDTB (Prasad et al, 2008)

“Theory-neutral” discourse model

No stipulation of overall structure, identifies local relations

Two types of annotation:

Explicit: triggered by lexical markers (‘but’) between spans

Arg2: syntactically bound to discourse connective, otherwise Arg1

Implicit: Adjacent sentences assumed related

Arg1: first sentence in sequence

Senses/Relations:

Comparison, Contingency, Expansion, Temporal

Broken down into finer-grained senses too

Basic Methodology

Identifying Relations

Ambiguity: one cue can signal multiple discourse relations

Because: CAUSE/EVIDENCE; But: CONTRAST/CONCESSION

Sparsity:

Only 15-25% of relations marked by cues
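Both problems show up in even the simplest cue-based approach. The sketch below is illustrative only: the table holds just the two cues listed above, and `CUE_RELATIONS` and `candidate_relations` are hypothetical names. A lookup can return candidate relations but cannot choose among them (ambiguity), and returns nothing when no cue is present (sparsity).

```python
import re

# Cue -> candidate relations, taken from the two examples above.
CUE_RELATIONS = {
    "because": ("CAUSE", "EVIDENCE"),
    "but": ("CONTRAST", "CONCESSION"),
}


def candidate_relations(text):
    """Return {cue: candidate relations} for every known cue word in text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return {tok: CUE_RELATIONS[tok] for tok in tokens if tok in CUE_RELATIONS}


# Explicit cue: two candidates, still ambiguous.
with_cue = candidate_relations("John hid Bill's car keys because he was drunk.")

# No cue: the relation has to be inferred some other way.
without_cue = candidate_relations("John hid Bill's car keys. He was drunk.")
```

The second call comes back empty even though a reader infers the relation without difficulty, which is why cue lookup alone covers only a minority of relations.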

Discourse structure modeling

Linear topic segmentation, RST or shallow discourse parsing

Exploiting shallow and deep language processing

Roadmap

Wrap-up

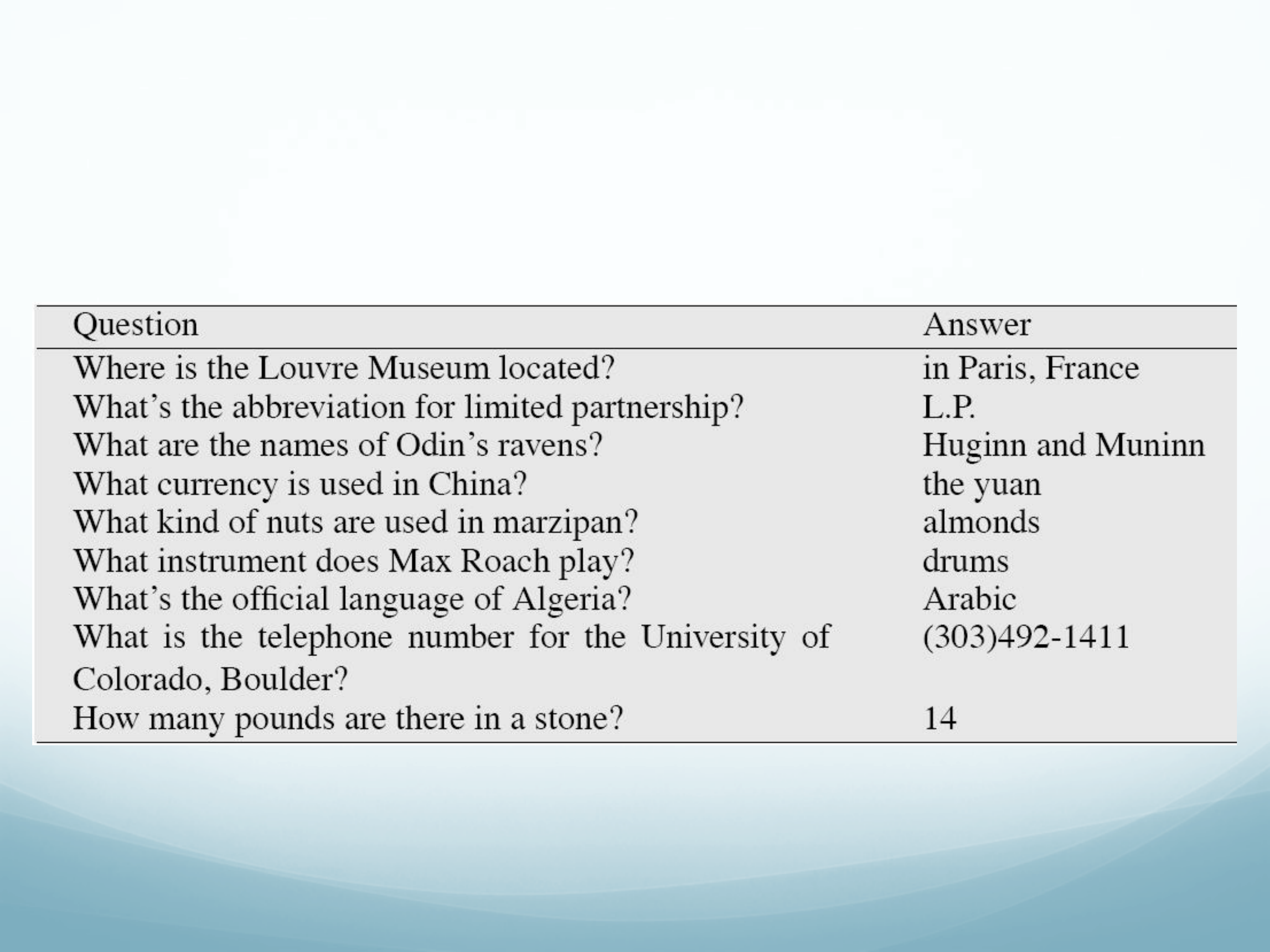

Why QA?

Grew out of information retrieval community

Who invented surf music?

What are the seven wonders of the world?

Short answer, possibly with supporting context

People ask questions on the web

Web logs:

Which English translation of the bible is used in official Catholic liturgies?

Who invented surf music?

What do search engines do with questions?

Backs off to keyword search

How well does this work?

Increasingly try to answer questions

Especially for wikipedia infobox types of info

The official Bible of the Catholic Church is the Vulgate, the Latin version of the …

The original Catholic Bible in English, pre-dating the King James Version (1611). It was translated from the Latin Vulgate, the Church's official Scripture text, by English

Search Engines & QA

What is the total population of the ten largest capitals in the US?

Rank 1 snippet:

The table below lists the largest 50 cities in the United States …..

Search Engines and QA

Search for exact question string

“Do I need a visa to go to Japan?”

Result: Exact match on Yahoo! Answers

Find ‘Best Answer’ and return following chunk

Perspectives on QA

Reading comprehension (Hirschman et al., 2000 onward)

Think SAT/GRE

Short text or article (usually middle school level)

Answer questions based on text

Also, ‘machine reading’

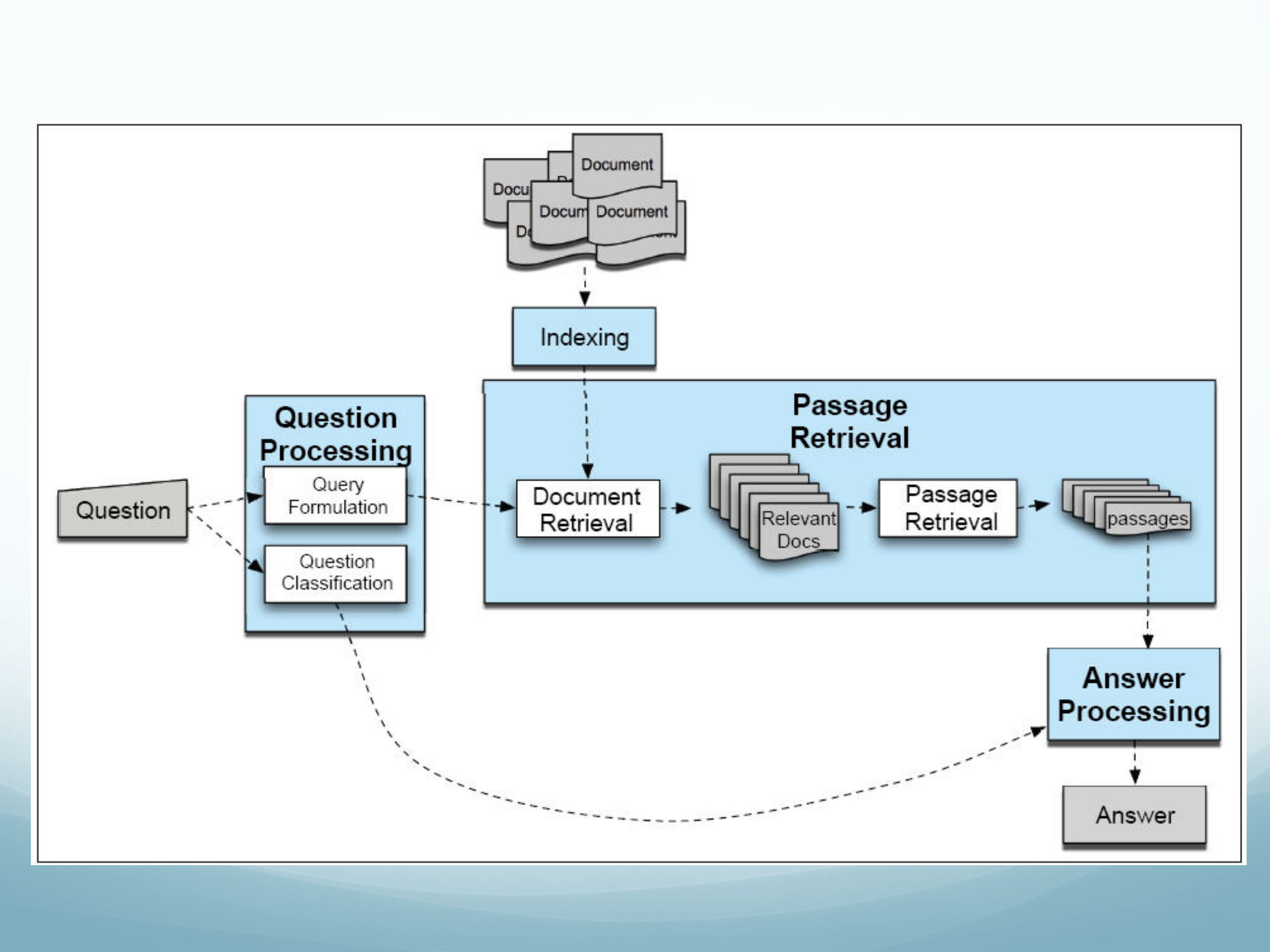

Question Answering (a la TREC)

Execute the following steps:

Query formulation

Question classification

Passage retrieval

Answer processing

Evaluation

Query Processing

Query reformulation

Convert question to suitable form for IR

E.g. ‘stop structure’ removal:

Delete function words, q-words, even low-content verbs

Question classification

Answer type recognition

Who → Person; What Canadian city → City

What is surf music →

Train classifiers to recognize expected answer type

Using POS, NE, words, synsets, hyper/hypo-nyms
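The two query-processing steps above can be sketched as follows. Everything here is illustrative: the `STOP_STRUCTURE` word list is an assumed sample rather than a standard resource, and `expected_answer_type` is a hand-written rule baseline standing in for the trained classifier, covering only the two mappings given above.

```python
import re

# Assumed sample of 'stop structure': q-words, function words, low-content verbs.
STOP_STRUCTURE = {
    "who", "what", "which", "when", "where", "why", "how",
    "the", "a", "an", "of", "in", "on", "to", "for",
    "is", "are", "was", "were", "do", "does", "did", "be",
}


def reformulate(question):
    """Drop stop-structure tokens, keeping content words for keyword IR."""
    tokens = re.findall(r"[A-Za-z0-9']+", question)
    return [t for t in tokens if t.lower() not in STOP_STRUCTURE]


def expected_answer_type(question):
    """Rule baseline (not a trained classifier): map question form to a type."""
    q = question.lower()
    if re.match(r"who\b", q):
        return "PERSON"
    if re.match(r"(what|which)\b.*\bcity\b", q):
        return "CITY"
    return None  # no rule fires; a real system would back off to a classifier


query = reformulate("What are the seven wonders of the world?")
who_type = expected_answer_type("Who invented surf music?")
city_type = expected_answer_type("What Canadian city has the largest population?")
unknown_type = expected_answer_type("What is surf music?")
```

The rules run out almost immediately (the last question gets no type), which is the motivation for training classifiers over richer features such as POS tags, named entities, and WordNet synsets.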