
AZ-300T06

Developing for the Cloud

Contents

Module 0 Start Here

● Welcome to Developing for the Cloud

Module 1 Developing Long-Running Tasks and Distributed Transactions

● Implement large-scale, parallel, and high-performance apps by using batches

● Implement resilient apps by using queues

● Implement code to address application events by using webhooks

● Online Lab - Configuring a Message-Based Integration Architecture

● Review Questions

Module 2 Configuring a Message-Based Integration Architecture

● Configure an app or service to send emails

● Configure an event publish and subscribe model

● Create and configure a notification hub

● Create and configure a service bus

● Configuring apps and services with Microsoft Graph

● Review Questions

Module 3 Developing for Asynchronous Processing

● Implement parallelism, multithreading, and processing

● Implement interfaces for storage or data access

● Implement appropriate asynchronous computing models

● Review Questions

Module 4 Developing for Autoscaling

● Implement autoscaling rules and patterns

● Implement code that addresses singleton application instances

● Review Questions

Module 5 Developing Azure Cognitive Services Solutions

● Cognitive Services Overview

● Develop Solutions using Computer Vision

● Develop Solutions using Bing Web Search

● Develop Solutions using Custom Speech Service

Welcome to Developing for the Cloud. This course is part of a series of five courses to help students prepare for Microsoft’s Azure Solutions Architect technical certification exam AZ-300: Microsoft Azure Architect Technologies. These courses are designed for IT professionals and developers with experience and knowledge across various aspects of IT operations, including networking, virtualization, identity, security, business continuity, disaster recovery, data management, budgeting, and governance.

The outline for this course is as follows:

● Implementing resilient apps by using queues, as well as implementing code to address application events by using webhooks. Implementing a webhook gives an external resource a URL for an application. The external resource then issues an HTTP request to that URL whenever a change is made that requires the application to take an action.

● Begin creating apps for Autoscaling

● Understand Azure Cognitive Services Solutions

High-performance computing (HPC)

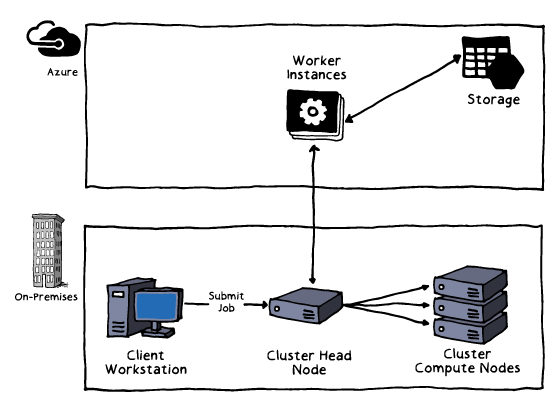

Traditionally, complex processing was something saved for universities and major research firms. A combination of cost, complexity, and accessibility served to keep many from pursuing potential gains for their organizations by processing large and complex simulations or models. Cloud platforms have democratized hardware so much that massive computing tasks are within the reach of hobbyist developers and small and medium-sized enterprises.

MCT USE ONLY. STUDENT USE PROHIBIT

compute nodes deployed in Azure VMs, managed by a Windows Server head node. With the latest

er’s perspective, RDMA is implemented in a way that makes it seem as if the machines are sharing

A8 and A9 VMs. These RDMA capabilities can boost the scalability and performance of certain MPI

Azure Batch is a service that manages VMs for large-scale parallel and HPC applications. Batch is a

Scaling out parallel workloads

The Batch API can handle scaling out an intrinsically parallel workload, such as image rendering, on a pool of up to thousands of compute cores. Instead of having to set up a compute cluster or write code to queue and schedule your jobs and move the necessary input and output data, you automate the scheduling of large compute jobs and scale a pool of compute VMs up and down to run them. You can write client apps or front ends to run jobs and tasks on demand, on a schedule, or as part of a larger workflow managed by tools such as Azure Data Factory.

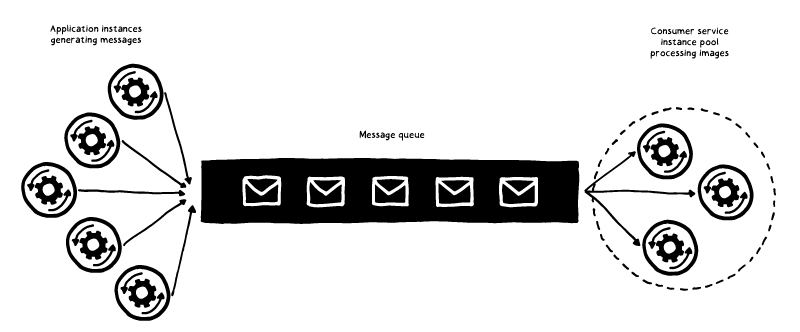

Use a message queue to implement the communication channel between the application and the instances of the consumer service. The application posts requests in the form of messages to the queue, and the consumer service instances receive messages from the queue and process them. This approach enables the same pool of consumer service instances to handle messages from any instance of the application. The figure illustrates using a message queue to distribute work to instances of a service.
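The pattern described above can be sketched with a small in-memory model. This is only an illustration: the WorkQueue class, the runConsumers helper, and the consumer names are hypothetical, and the consumers simply take turns, whereas real consumer instances would compete for messages concurrently against a durable queue service.

```javascript
// In-memory stand-in for a message queue (an assumption for illustration;
// a real system would use a durable queue service).
class WorkQueue {
  constructor() {
    this.messages = [];
  }
  // The application posts a request as a message.
  post(message) {
    this.messages.push(message);
  }
  // Returns the next message, or undefined when the queue is empty.
  receive() {
    return this.messages.shift();
  }
}

// Consumers take turns receiving messages until the queue is empty.
function runConsumers(queue, consumerNames) {
  const log = [];
  let next = 0;
  let message;
  while ((message = queue.receive()) !== undefined) {
    const consumer = consumerNames[next % consumerNames.length];
    log.push({ consumer, message });
    next += 1;
  }
  return log;
}

const queue = new WorkQueue();
// Two application instances post requests to the same queue.
queue.post({ from: 'app-1', body: 'resize image 1' });
queue.post({ from: 'app-2', body: 'resize image 2' });
queue.post({ from: 'app-1', body: 'resize image 3' });

const processed = runConsumers(queue, ['consumer-A', 'consumer-B']);
for (const { consumer, message } of processed) {
  console.log(`${consumer} handled "${message.body}" from ${message.from}`);
}
```

Because the consumers only see the queue, any consumer can handle a message from any application instance, which is the property the pattern relies on.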

To insert a message into an existing queue, first create a new CloudQueueMessage. Next, call the AddMessage method. A CloudQueueMessage can be created from either a string (in UTF-8 format) or a byte array. Here is code that creates a queue (if it doesn't exist):

CloudStorageAccount storageAccount = CloudStorageAccount.Parse(CloudConfigu-

message assures that if your code fails to process a message due to a hardware or software failure,

var messages = new List<Message>();

for (int i = 0; i < 10; i++)
{
    // Encode each message body as UTF-8 bytes.
    var message = new Message(Encoding.UTF8.GetBytes($"Message {i:00}"));
    messages.Add(message);
}

await queueClient.SendBatchAsync(messages);

static async Task MethodToHandleNewMessage(Message message, CancellationToken token)
{
    string messageString = Encoding.UTF8.GetString(message.Body);
    Console.WriteLine($"Received message: {messageString}");
    await queueClient.CompleteAsync(message.SystemProperties.LockToken);
}

Notice that the message handler calls the CompleteAsync method of the queue client as its last step. This ensures that the message is not received again. Alternatively, you can call AbandonAsync if you wish to stop handling the message and receive it again.
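The difference between completing and abandoning a message can be illustrated with a minimal in-memory model. This is a sketch under stated assumptions, not the Service Bus SDK: the PeekLockQueue class and its method names are hypothetical and only mimic the behavior described above.

```javascript
// Minimal in-memory model of lock-based delivery (not the Service Bus SDK).
class PeekLockQueue {
  constructor() {
    this.available = [];      // messages waiting to be received
    this.locked = new Map();  // lockToken -> message currently being handled
    this.nextToken = 1;
  }
  send(body) {
    this.available.push(body);
  }
  // Hands out the next message together with a lock token, or null if empty.
  receive() {
    if (this.available.length === 0) return null;
    const body = this.available.shift();
    const lockToken = this.nextToken++;
    this.locked.set(lockToken, body);
    return { body, lockToken };
  }
  // Complete: the message was processed, so it is removed for good.
  complete(lockToken) {
    this.locked.delete(lockToken);
  }
  // Abandon: release the lock so the message can be received again.
  abandon(lockToken) {
    const body = this.locked.get(lockToken);
    if (body === undefined) return;
    this.locked.delete(lockToken);
    this.available.unshift(body);
  }
}

const orders = new PeekLockQueue();
orders.send('Message 00');

const first = orders.receive();
orders.abandon(first.lockToken);   // give up; the message becomes visible again

const second = orders.receive();   // the same message is delivered a second time
orders.complete(second.lockToken); // processed; it will not be received again

console.log(second.body, orders.receive());
```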

Implement code to address application events by using webhooks

"type": "http",

"direction": "out"

}

]

}

{
  "bindings": [
    {
      "type": "httpTrigger",
      "direction": "in",
      "webHookType": "github",
      "name": "req"
    },
    {
      "type": "http",
      "direction": "out",
      "name": "res"
    }
  ],
  "disabled": false
}

context.log('GitHub WebHook triggered!', data.comment.body);

Online Lab - Configuring a Message-Based Integration Architecture

Scenario

Adatum has several web applications that process files uploaded at regular intervals to their on-premises file servers. File sizes vary, but they can reach up to 100 MB. Adatum is considering migrating the applications to Azure App Service or Azure Functions-based apps and using Azure Storage to host uploaded files. You plan to test two scenarios:

In this lab, you will use the Azure Storage Blob service to store files to be processed. A client just needs to drop the files to be shared into a designated Azure Blob container. In the first exercise, the files will be consumed directly by an Azure Function, leveraging its serverless nature. You will also take advantage of Application Insights to provide instrumentation, facilitating monitoring and analysis of file processing. In the second exercise, you will use Event Grid to automatically generate a claim check message and send it to an Azure Storage queue. This allows a client application to poll the queue, get the message, and then use the stored reference data to download the payload directly from Azure Blob Storage.
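The claim check idea from the second exercise can be sketched in a few lines. This is an in-memory illustration only: blobContainer and claimQueue are hypothetical stand-ins for an Azure Blob container and an Azure Storage queue, and here the sender queues the reference itself, whereas in the lab Event Grid generates the claim check message.

```javascript
const blobContainer = new Map(); // stand-in for an Azure Blob container
const claimQueue = [];           // stand-in for an Azure Storage queue

// Sender: store the large payload, then queue only a small reference to it.
function uploadWithClaimCheck(blobName, payload) {
  blobContainer.set(blobName, payload);
  claimQueue.push(JSON.stringify({ blobName, size: payload.length }));
}

// Receiver: poll the queue, read the reference, then fetch the payload.
function receiveNext() {
  const raw = claimQueue.shift();
  if (raw === undefined) return null;
  const claim = JSON.parse(raw);
  return { claim, payload: blobContainer.get(claim.blobName) };
}

uploadWithClaimCheck('workitems/report.csv', 'id,total\n1,42\n');
const received = receiveNext();
console.log(received.claim, received.payload.length);
```

The queue message stays small regardless of the file size, which is why the pattern suits payloads that can reach 100 MB.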


2. In the Azure portal, in the Microsoft Edge window, start a Bash session within the Cloud Shell.

● Storage account: a name of a new storage account

● File share: a name of a new file share

export LOCATION='<Azure_region>'

6. From the Cloud Shell pane, run the following to create a resource group that will host all resources that you will provision in this lab:

export CONTAINER_NAME="workitems"

export STORAGE_ACCOUNT=$(az storage account create --name "${STORAGE_AC-

8. Note: The same storage account will also be used by the Azure function to facilitate its own processing requirements. In real-world scenarios, you might want to consider creating a separate storage account for this purpose.

9. From the Cloud Shell pane, run the following to create a variable storing the value of the connection string property of the Azure Storage account:

CATION}" --properties '{"Application_Type": "other", "ApplicationId":

NAME}" --query "properties.InstrumentationKey" --resource-group "${RE-

11. From the Cloud Shell pane, run the following to create the Azure Function that will process events

source-group "${RESOURCE_GROUP_NAME}" --settings "STORAGE_CONNECTION_

17. On the Extensions not Installed blade, click Install and wait until the installation of the extension

19. On the Azure Blob Storage trigger blade, specify the following and click Create to create a new function within the Azure function:

● Name: BlobTrigger

21. Note: By default, the function is configured to simply log the event corresponding to creation of a new blob. In order to carry out blob processing tasks, you would modify the content of this file.

Task 2: Validate an Azure Function App Storage Blob trigger

export CONTAINER_NAME="workitems"

3. From the Cloud Shell pane, run the following to upload a test blob to the Azure Storage account you created earlier in this task:

4. From the Cloud Shell pane, run the following to create an Azure Storage account and its container that will be used by the Event Grid subscription that you will configure in this task:

export STORAGE_ACCOUNT_NAME="az300t06st3${PREFIX}"

export STORAGE_ACCOUNT_ID=$(az storage account show --name "${STORAGE_ACCOUNT_NAME}" --query "id" --resource-group "${RESOURCE_GROUP_NAME}" -o tsv)

6. From the Cloud Shell pane, run the following to create the Storage Account queue that will store messages generated by the Event Grid subscription that you will configure in this task:

az eventgrid event-subscription create --name "${QUEUE_SUBSCRIPTION_NAME}" --included-event-types 'Microsoft.Storage.BlobCreated' --endpoint "${STORAGE_ACCOUNT_ID}/queueservices/default/queues/${QUEUE_NAME}" --endpoint-type "storagequeue" --source-resource-id "${STORAGE_ACCOUNT_ID}"

Task 2: Validate an Azure Event Grid subscription-based queue messaging

export AZURE_STORAGE_ACCESS_KEY="$(az storage account keys list --ac-

5. Note that the queue contains a single message. Click its entry to display the Message properties

az group delete --name "${RESOURCE_GROUP_NAME}" --no-wait --yes

Azure Batch

You are designing a video editing solution. The solution will require extensive hardware resources to perform the required processing on large video files.

Messaging

You manage an e-Commerce solution that uses Azure Batch.

Azure Batch uses a message queue to implement the communication channel between the application and the instances of the consumer service. The application posts requests in the form of messages to the queue, and the consumer service instances receive messages from the queue and process them. This approach enables the same pool of consumer service instances to handle messages from any instance of the application.

Azure Service Bus


SendGrid provides transactional email delivery, scalability based on email volume, and real-time analytics for the sent messages. SendGrid also has a flexible API to enable custom integration scenarios.

Sending emails through SendGrid by using C#

SendGridMessage message = new SendGridMessage()

{

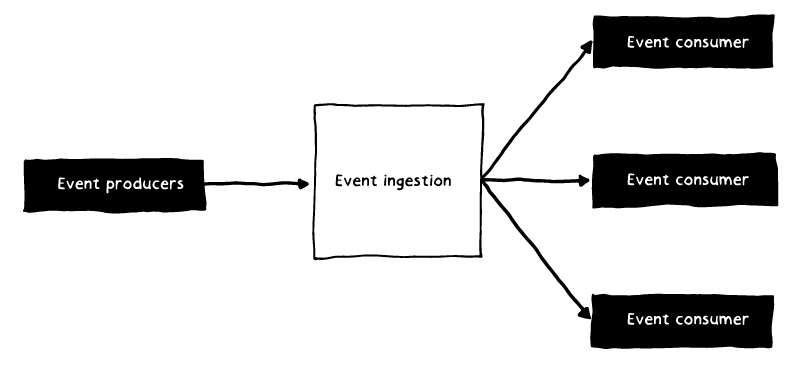

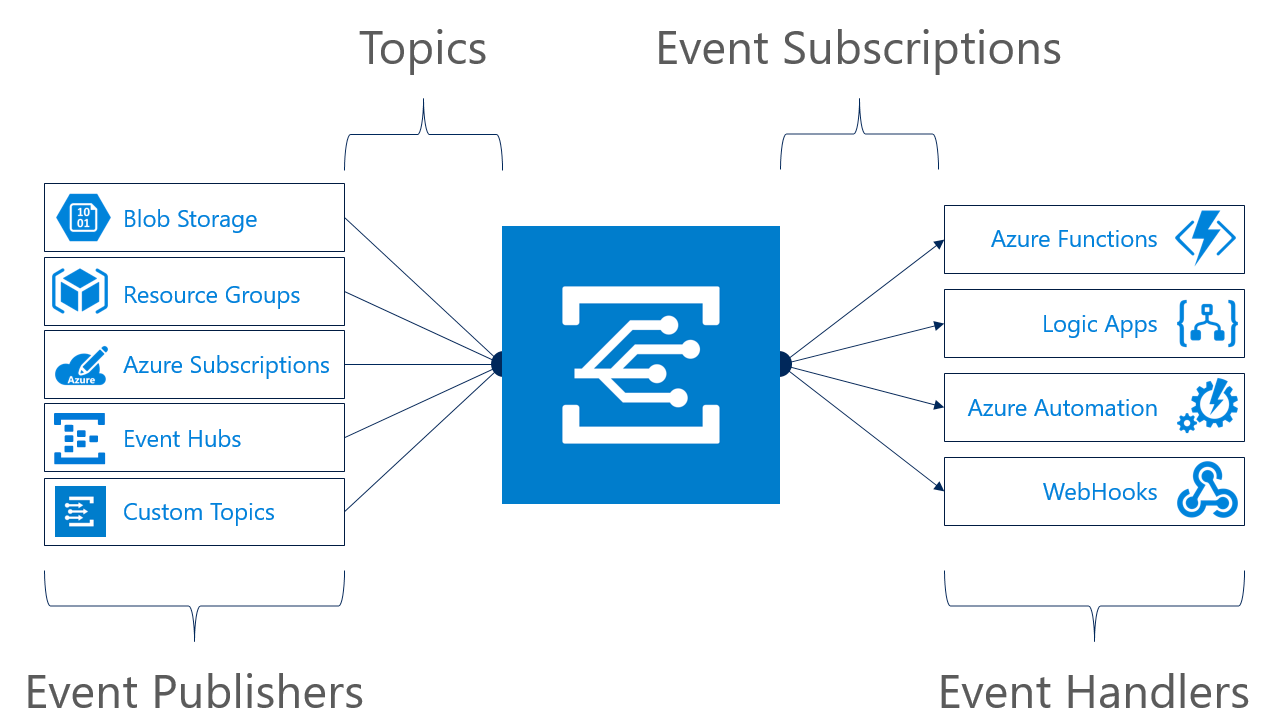

Modern application requirements stipulate that applications we build should be able to handle a high volume and velocity of data, process that data in real time, and allow multiple systems to respond to the same data. If we were to build applications in a serial manner, this stipulation would be incredibly difficult to meet. To help handle these application scenarios, many modern systems are built using an architectural style referred to as event-driven architecture. An event-driven architecture consists of event producers that generate a stream of events and event consumers that listen for the events.
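The producer and consumer roles can be sketched with the EventEmitter class from the Node.js standard library. This is an in-process illustration only, not a cloud messaging service; the event name photoUploaded and the two consumers are hypothetical.

```javascript
const { EventEmitter } = require('events');

const bus = new EventEmitter();
const auditLog = [];
const thumbnails = [];

// Two independent consumers listen for the same event.
bus.on('photoUploaded', (event) => auditLog.push(`stored ${event.name}`));
bus.on('photoUploaded', (event) => thumbnails.push(`thumb-${event.name}`));

// The producer emits events without knowing who is listening.
bus.emit('photoUploaded', { name: 'cat.png' });
bus.emit('photoUploaded', { name: 'dog.png' });

console.log(auditLog, thumbnails);
```

Adding a third consumer requires no change to the producer, which is what lets multiple systems respond to the same data.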

● Event stream processing. You use a data streaming platform, such as Azure IoT Hub or Apache Kafka, as a pipeline to ingest events and feed them to stream processors. The stream processors act to process or transform the stream. There may be multiple stream processors for different subsystems of


Subscribing to Blob storage events using Azure CLI

Event Grid connects data sources and event handlers. For example, an Event Grid can instantly trigger a serverless function to run an image analysis each time a new photo is added to a Blob storage container. In this example, we will create an event source using Blob storage.

az storage account create --name demostor --location eastus --resource-group
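On the handler side, code that receives a batch of events typically picks out the event types it cares about. The following is a minimal sketch, assuming each event carries an eventType field and that Blob storage events expose the blob address in data.url; the sample batch, storage account, and blob names are made up for illustration.

```javascript
// Returns the blob URLs from the BlobCreated events in a delivered batch.
function blobCreatedUrls(events) {
  return events
    .filter((event) => event.eventType === 'Microsoft.Storage.BlobCreated')
    .map((event) => event.data.url);
}

// Hypothetical sample batch; account and blob names are made up.
const sampleEvents = [
  {
    eventType: 'Microsoft.Storage.BlobCreated',
    subject: '/blobServices/default/containers/photos/blobs/cat.png',
    data: { url: 'https://demostor.blob.core.windows.net/photos/cat.png' },
  },
  {
    eventType: 'Microsoft.Storage.BlobDeleted',
    subject: '/blobServices/default/containers/photos/blobs/old.png',
    data: { url: 'https://demostor.blob.core.windows.net/photos/old.png' },
  },
];

console.log(blobCreatedUrls(sampleEvents));
```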


Configure the Azure Relay service

Note: The Azure Relay capabilities differ from network-level integration technologies, such as virtual private network (VPN) technology, in that Azure Relay can be scoped to a single application endpoint on a single machine, while VPN technology is far more intrusive, as it relies on altering the network environment.

Hybrid Connections

const WebSocket = require('hyco-ws');

var listenUri =

The two classes are mostly contract compatible, meaning that an existing application using the ws.Server class can easily be changed to use the relayed version. The main differences are in the constructor and in the available options. The RelayedServer constructor supports a different set of arguments than the Server, because it is not a standalone listener or able to be embedded into an existing HTTP listener framework. There are also fewer options available, since the WebSocket protocol management is largely delegated to the Azure Relay service:

var server = ws.RelayedServer;

});

The RelayedServer constructor has two required arguments to establish a connection over the WebSocket protocol using Azure Relay:


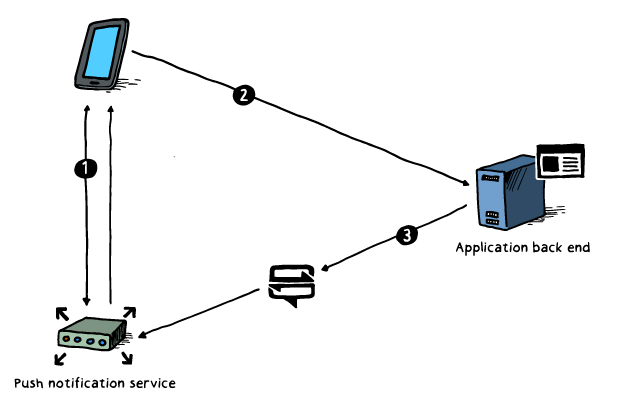

Configuring Notification Hubs in iOS

notificationHubPath:HUB-

NSLog(@"Error registering for notifications: %@", error);

[self MessageBox:@"Registration Status" message:@"Regis-

-(void)MessageBox:(NSString *) title message:(NSString *)messageText

cancelButtonTitle:@"OK" otherButtonTitles: nil];

Create and configure a notification hub

● You must register the com.google.firebase.iid.FirebaseInstanceIdReceiver receiver to enable PNS registration and message receipt.

In most examples, you would first add a few constants to a C# class that will contain important connection details for your notification hub:

public override void OnTokenRefresh()
{
    var refreshedToken = FirebaseInstanceId.Instance.Token;
    Log.Debug(TAG, "FCM token: " + refreshedToken);
    SendRegistrationToServer(refreshedToken);
}

void SendRegistrationToServer(string token)
{
    hub = new NotificationHub(Constants.NotificationHubName,
        Constants.ListenConnectionString, this);


Sending messages from an application back end to Notification Hubs using C#

// Inside a C# verbatim string, each double quote is escaped by doubling it.
var alert = @"{""aps"": {""alert"": ""From contoso-admin: Hello World""}}";
await hub.SendAppleNativeNotificationAsync(alert);

var notif = @"{""data"": {""message"": ""From contoso-admin: Hello World""}}";
await hub.SendGcmNativeNotificationAsync(notif);

}

}


Azure IoT Hub

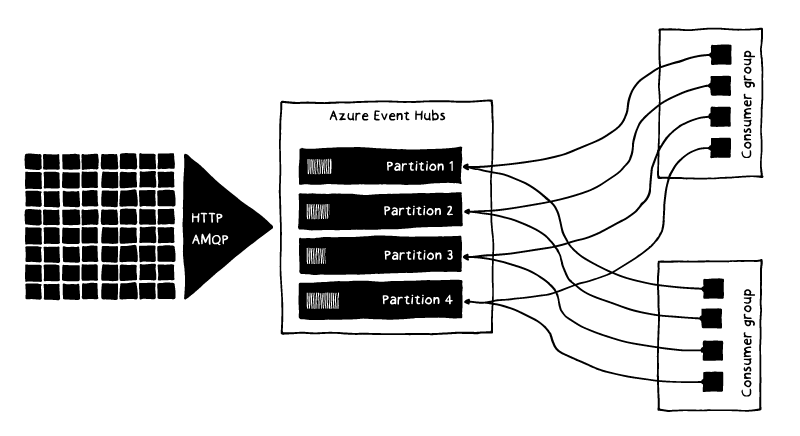

Azure provides services specifically developed for diverse types of connectivity and communication to help you connect your data to the power of the cloud. Both Azure IoT Hub and Azure Event Hubs are cloud services that can ingest large amounts of data and process or store that data for business insights. The two services are similar in that they both support the ingestion of data with low latency and high reliability, but they are designed for different purposes. IoT Hub was developed specifically to address the unique requirements of connecting IoT devices—at scale—to Azure Cloud Services, while Event Hubs was designed for big data streaming. This is why Microsoft recommends using Azure IoT Hub to connect IoT devices to Azure.

Azure IoT Hub is the cloud gateway that connects IoT devices to gather data to drive business insights and automation. In addition, IoT Hub includes

Whether an application or service runs in the cloud or on-premises, it often needs to interact with other applications or services. Different situations call for different styles of communication. Sometimes, letting applications send and receive messages through a simple queue is the best solution. In other situations, an ordinary queue isn't enough; a queue with a publish-and-subscribe mechanism is better. In some cases, all that's needed is a connection between applications, and queues are not required.

To provide a broadly useful way to do this, Azure offers Azure Service Bus. Azure Service Bus provides all three options, enabling your applications to interact in several different ways. Service Bus is a multitenant cloud service, which means that the service is shared by multiple users.

When you create a queue, topic, or relay, you give it a name. Combined with whatever you called your namespace, this name creates a unique identifier for the object. Applications can provide this name to Service Bus and then use that queue, topic, or relay to communicate with each other.

Service Bus queues


Create and configure a service bus

require "azure"

To create a connection to Service Bus using the client object, use the following code to set the values of the namespace, key name, key, signer, and host:

The following example demonstrates how to send a test message to the queue named test-queue using send_queue_message():

message = Azure::ServiceBus::BrokeredMessage.new("test queue message")

Microsoft Graph

Microsoft Graph is the gateway to data and intelligence in Microsoft 365. Microsoft Graph provides a unified programmability model that you can use to query and manipulate data in Microsoft Office 365, Microsoft Enterprise Mobility

● Windows 10 services: activities and devices

Microsoft Graph connects all the resources across these services using relationships. For example, a user can be connected to a group through a memberOf relationship and to another user through a manager relationship. Your app can traverse these relationships to access these connected resources and perform actions on them through the API.
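Traversing a relationship usually amounts to requesting a URL that names the resource followed by the relationship. The sketch below only builds such URLs and does not call the service. It assumes the v1.0 endpoint of Microsoft Graph; the userRelationshipUrl helper and the user adele@contoso.com are hypothetical, and a real request would also need an access token.

```javascript
const GRAPH_ROOT = 'https://graph.microsoft.com/v1.0';

// Builds the URL for a relationship (such as memberOf or manager) of a user.
function userRelationshipUrl(userId, relationship) {
  return `${GRAPH_ROOT}/users/${encodeURIComponent(userId)}/${relationship}`;
}

const groupsUrl = userRelationshipUrl('adele@contoso.com', 'memberOf');
const managerUrl = userRelationshipUrl('adele@contoso.com', 'manager');
console.log(groupsUrl);
console.log(managerUrl);
```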


Many personal computers and workstations have several CPU cores that enable the simultaneous execution of multiple threads. As client devices increase in performance and server computers opt for horizontal over vertical scaling, it's becoming increasingly common for a local client device to have more computing power and threads than the server it is currently accessing. Computers are expected to gain even more cores in the near future, and this divide will continue to grow. It is more critical now than ever to take advantage of parallelism in client devices, server-side computers, and cloud-managed computing.

Parallelism

the tracking of the asynchronous methods. The keyword and its abstraction makes your code more

string greeting = $"You are running {Environment.OSVersion}";

Implement Azure Functions and Azure Logic Apps

Azure Functions integrates with various Azure and third-party services. These services can trigger your function and start execution, or they can serve as input and output for your code. Azure Functions supports the following service integrations:

{

"disabled": false,

trigger that has the built-in criterion "When a record is updated." If the trigger detects an event that

portal through your browser and in Visual Studio. You can also use Azure PowerShell commands and

logic app definitions in JavaScript Object Notation (JSON) by working in code view mode. Here is an

}

},

"actions": {

"readData": {

"type": "Http",

"inputs": {

"method": "GET",

"uri": "@parameters('uri')"}

}

},

"outputs": { }

}

4. In the New blade, in the Search the Marketplace box, enter function app, and then press Enter.

5. In the search results, select the Function App template.

3. In the OS group, select Windows.

4. In the Hosting Plan list, select Consumption Plan.

public static async Task<HttpResponseMessage> Run(HttpRequestMessage req, TraceWriter log)

{

log.Info("C# HTTP trigger executed");

return req.CreateResponse(

await req.Content.ReadAsAsync<object>()

);

}

18. Note: This script will echo the body of a request back to the calling client.

2. Replace the request body field's value with the following JSON object:

{

"sizeSystem": "US",

"sizeType": "regular",

"targetCountry": "US",

"taxes": [

{

"country": "US",

"rate": "9.9",

"region": "CA",

"taxShip": "True"

}

],

"title": "Cute Toddler Sundress"

}

7. At the top of the FUNCDEMO blade, select Delete resource group.

8. In the deletion confirmation dialog box, enter the name FUNCDEMO, and then select Delete.

to implement the object-relational mapper, because Entity Framework–specific code exists directly in your web application. Things might be even worse if you chose to use a different database service altogether.

Second, you might observe I/O blocking while your code uses the synchronous version of the Entity Framework methods in the System.Data.Entity namespace.

public class EntityFrameworkDataLayer : IDataLayer
{
    public async Task<string> GetNameForIdAsync(int id)
    {
        ItemsContext context = new ItemsContext();
        Item item = await context.Items.FindAsync(id);
        return item.Name;
    }
}

public class ItemsController : Controller
{
    public async Task<string> Get(IDataLayer dataLayer, int id)
    {
        return await dataLayer.GetNameForIdAsync(id);
    }
}

The solution must route messages to manufacturing, create orders in a sales system, and notify your

How can you ensure that a user request does not affect the experience of other users? Which design

design patterns. For this scenario, unpredictable bursting is most appropriate. Solutions that use this

handle increased workloads and then released when no longer necessary.

In distributed application scenarios, you are often advised to scale out your application horizontally by adding multiple, small instances of your application to the cloud. With horizontal scaling, you can serve more client devices and ensure high availability and resiliency against any specific fault.

Unfortunately, application workloads are unpredictable. To illustrate this, here are four of the most common computing patterns you will see for web applications hosted in the cloud:

A primary advantage of the cloud is elastic scaling, the ability to use as much capacity as you need, scaling out as load increases and scaling in when the extra capacity is not needed. In the context of

cloud service can scale out and in to exactly match the amount of instances needed for your specific


Implement code that addresses singleton application instances

{
  "clientId": "b52dd125-9272-4b21-9862-0be667bdf6dc",
  "clientSecret": "ebc6e170-72b2-4b6f-9de2-99410964d2d0",
  "subscriptionId": "ffa52f27-be12-4cad-b1ea-c2c241b6cceb",
  "tenantId": "72f988bf-86f1-41af-91ab-2d7cd011db47",
  "activeDirectoryEndpointUrl": "https://login.microsoftonline.com",
  "resourceManagerEndpointUrl": "https://management.azure.com/",
  "activeDirectoryGraphResourceId": "https://graph.windows.net/",
  "sqlManagementEndpointUrl": "https://management.core.windows.net:8443/",
  "galleryEndpointUrl": "https://gallery.azure.com/",
  "managementEndpointUrl": "https://management.core.windows.net/"
}

azure.VirtualMachines

The properties have both synchronous and asynchronous versions of methods to perform actions such as Create, Delete, List, and Get. If we wanted to get a list of VMs asynchronously, we could use the ListAsync method:

Implement code that addresses a transient state

Handling transient errors

In the cloud, transient faults aren't uncommon, and an application should be designed to handle them elegantly and transparently. This minimizes the effects faults can have on the business tasks the application is performing.

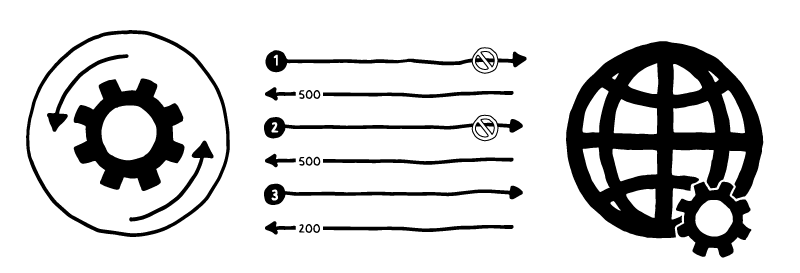

For the more common transient failures, the period between retries should be chosen to spread requests from multiple instances of the application as evenly as possible. This reduces the chance of a busy service continuing to be overloaded. If many instances of an application are continually overwhelming a service with retry requests, it'll take the service longer to recover.

If the request still fails, the application can wait and make another attempt. If necessary, this process can be repeated with increasing delays between retry attempts, until some maximum number of requests have been attempted. The delay can be increased incrementally or exponentially depending on the type of failure and the probability that it'll be corrected during this time.
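The two ways of increasing the delay, plus a random offset that spreads retries from many application instances, can be sketched as follows. The function names, the 500 ms step, and the 5000 ms cap are illustrative assumptions, not values taken from the course.

```javascript
// Delay before retry number `attempt` (1-based), in milliseconds.
function incrementalDelay(attempt, stepMs) {
  return attempt * stepMs;
}

// Doubles the delay on each attempt, capped at maxMs.
function exponentialDelay(attempt, baseMs, maxMs) {
  return Math.min(maxMs, baseMs * 2 ** (attempt - 1));
}

// Random jitter spreads retries from many instances over time.
// `random` is injectable so the result can be made deterministic in tests.
function withJitter(delayMs, random = Math.random) {
  return Math.floor(delayMs / 2 + random() * (delayMs / 2));
}

const attempts = [1, 2, 3, 4, 5];
console.log(attempts.map((n) => incrementalDelay(n, 500)));
console.log(attempts.map((n) => exponentialDelay(n, 500, 5000)));
console.log(withJitter(1000));
```

With these inputs the incremental schedule grows by a fixed step, while the exponential schedule doubles until it reaches the cap. The jittered value falls somewhere between half the delay and the full delay.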

2. The application waits for a short interval and tries again. The request still fails with HTTP response

The application should wrap all attempts to access a remote service in code that implements a retry

es. You can reduce the frequency of these faults by scaling out the service. For example, if a database service is continually overloaded, it might be beneficial to partition the database and spread the load

method, shown below, invokes an external service asynchronously through the TransientOperation-

The statement that invokes this method is contained in a try/catch block wrapped in a for loop. The for loop exits if the call to the TransientOperationAsync method succeeds without throwing an exception. If the TransientOperationAsync method fails, the catch block examines the reason for the failure. If it's believed to be a transient error, the code waits for a short delay before retrying the operation.

The for loop also tracks the number of times that the operation has been attempted, and if the code fails three times, the exception is assumed to be more long lasting. If the exception isn't transient or it's long lasting, the catch handler will throw an exception. This exception exits the for loop and should be caught by the code that invokes the OperationWithBasicRetryAsync method.
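The loop described above might look like the following sketch. It is a reconstruction from the description, not the course's original listing: the `IsTransient` test, the delegate parameter standing in for `TransientOperationAsync`, and the `maxAttempts` and `delay` parameters are assumptions made so the example is self-contained.

```csharp
using System;
using System.Threading.Tasks;

public static class BasicRetry
{
    // Hypothetical transient-fault test; a real one would inspect
    // status codes or service-specific exception types.
    public static bool IsTransient(Exception ex) => ex is TimeoutException;

    public static async Task OperationWithBasicRetryAsync(
        Func<Task> transientOperationAsync, int maxAttempts, TimeSpan delay)
    {
        for (int attempt = 1; ; attempt++)
        {
            try
            {
                await transientOperationAsync();
                return; // success: leave the loop
            }
            catch (Exception ex)
            {
                // Rethrow when the fault is not transient or the attempts
                // are used up; the caller is expected to handle it.
                if (!IsTransient(ex) || attempt >= maxAttempts)
                {
                    throw;
                }
            }

            // Transient fault: wait briefly before the next attempt.
            await Task.Delay(delay);
        }
    }

    public static void Main()
    {
        int calls = 0;
        Func<Task> flaky = () =>
        {
            calls++;
            if (calls < 3) throw new TimeoutException("simulated transient fault");
            return Task.CompletedTask;
        };

        OperationWithBasicRetryAsync(flaky, 3, TimeSpan.FromMilliseconds(10))
            .GetAwaiter().GetResult();
        Console.WriteLine("Succeeded after " + calls + " calls");
    }
}
```

A fixed delay keeps the sketch short; an increasing delay between attempts can be substituted without changing the structure of the loop.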

Review Questions

Auto-scale metrics

You are designing a solution for a university. Students will use the solution to register for classes. You plan to implement elastic scaling to handle the high levels of activity at the beginning of each class registration period. You plan to implement an App Service instance and trigger auto-scaling based on metrics.

What options are available to handle transient errors?

Suggested Answer ↓

When an application loses a connection to a resource such as a database, you can use cancel, retry, or retry with delay logic to handle and potentially resolve the issue. In this case, the database may become unavailable due to network issues. Alternatively, the database may be busy with maintenance tasks. You can implement retry logic to reconnect to the database.

Getting started with free trials

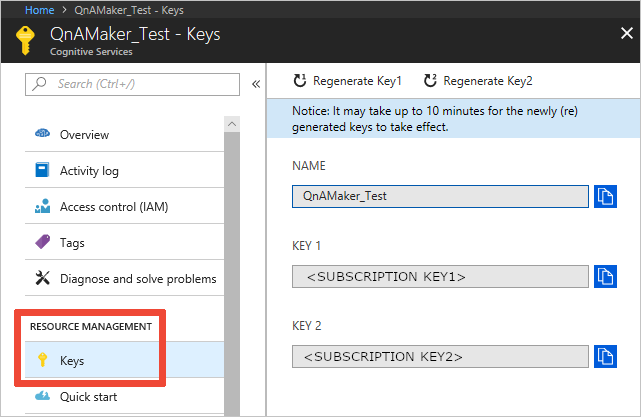

Signing up for free trials only takes an email and a few simple steps1. You will need a Microsoft Account if you don't already have one. You will receive a unique pair of keys for each API requested. The second one is just a spare. Please do not share the secret keys with anyone. Trials have both a rate limit, in terms of transactions per second or minute, and a monthly usage cap. A transaction is simply an API call. You can upgrade to paid tiers to lift the restrictions.

After uploading an image or specifying an image URL, Computer Vision API's algorithms output tags

There are several ways to categorize images. Computer Vision API can set a boolean flag to indicate whether an image is black and white or color. The API can also set a flag to indicate whether an image is a line drawing or not. It can also indicate whether an image is clip art or not and indicate its quality on a scale of 0-3.

Domain-specific content

Analyze only a chosen model by invoking an HTTP POST call. If you know which model you want to use, specify the model's name. You only get information relevant to that model. For example, you can use this option to look only for celebrity recognition. The response contains a list of potential matching celebrities, accompanied by their confidence scores.

Requirements for OCR:

● The size of the input image must be between 40 x 40 and 3200 x 3200 pixels.

● The image cannot be bigger than 10 megapixels.
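The two OCR size requirements can be checked before an image is sent to the service. The following is a minimal sketch under stated assumptions: the class and method names are hypothetical, "10 megapixels" is read as 10,000,000 pixels, and the dimension limits are applied to each side separately.

```csharp
using System;

public static class OcrInputCheck
{
    private const int MinSide = 40;
    private const int MaxSide = 3200;
    // Assumption: "10 megapixels" is interpreted as 10,000,000 pixels.
    private const long MaxPixels = 10L * 1000 * 1000;

    public static bool MeetsOcrRequirements(int width, int height)
    {
        bool sidesOk = width >= MinSide && height >= MinSide
                    && width <= MaxSide && height <= MaxSide;
        // Use long arithmetic so the pixel count cannot overflow.
        return sidesOk && (long)width * height <= MaxPixels;
    }

    public static void Main()
    {
        Console.WriteLine(MeetsOcrRequirements(1920, 1080));
        Console.WriteLine(MeetsOcrRequirements(3200, 3200));
    }
}
```

Under this reading the two rules interact: a 3200 x 3200 image satisfies the dimension limit but holds 10,240,000 pixels, so it would fail the megapixel limit.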


Text recognition saves time and effort. You can be more productive by taking an image of text rather than transcribing it. Text recognition makes it possible to digitize notes. This digitization allows you to implement quick and easy search. It also reduces paper clutter.

Input requirements:

● Supported image formats: JPEG, PNG, and BMP.

Develop Solutions using Computer Vision 85

● Click the Browse tab, and in the Search box type “Microsoft.Azure.CognitiveServices.Vision.ComputerVision”.

● Select Microsoft.Azure.CognitiveServices.Vision.ComputerVision when it displays, then click the checkbox next to your project name, and Install.

7. Optionally, set remoteImageUrl to a different image.

8. Run the program.

private const string remoteImageUrl =
    "http://upload.wikimedia.org/wikipedia/commons/3/3c/Shaki_waterfall.jpg";

// Specify the features to return
private static readonly List<VisualFeatureTypes> features =
    new List<VisualFeatureTypes>()
{
    VisualFeatureTypes.Categories, VisualFeatureTypes.Description,
    VisualFeatureTypes.Faces, VisualFeatureTypes.ImageType,
    VisualFeatureTypes.Tags
};

new ApiKeyServiceClientCredentials(subscriptionKey),
new System.Net.Http.DelegatingHandler[] { });

Console.WriteLine(
    "\nInvalid remoteImageUrl:\n{0} \n", imageUrl);
return;

await computerVision.AnalyzeImageAsync(imageUrl, features);

using (Stream imageStream = File.OpenRead(imagePath))
{
    ImageAnalysis analysis = await computerVision.AnalyzeImageInStreamAsync(
        imageStream, features);
    DisplayResults(analysis, imagePath);
}
}

// Display the most relevant caption for the image
private static void DisplayResults(ImageAnalysis analysis, string imageUri)
{
    Console.WriteLine(imageUri);
    Console.WriteLine(analysis.Description.Captions[0].Text + "\n");
}
}
}

The following information shows you how to generate a thumbnail from an image using the Computer Vision Windows client library.

Prerequisites

input image. Computer Vision uses smart cropping to intelligently identify the region of interest and


static void Main(string[] args)
{
    ComputerVisionAPI computerVision = new ComputerVisionAPI(
        new ApiKeyServiceClientCredentials(subscriptionKey),
        new System.Net.Http.DelegatingHandler[] { });

    // You must use the same region as you used to get your subscription
    // keys. For example, if you got your subscription keys from westus,
    // replace "Westcentralus" with "Westus".

// Create a thumbnail from a remote image
private static async Task GetRemoteThumbnailAsync(
    ComputerVisionAPI computerVision, string imageUrl)
{
    if (!Uri.IsWellFormedUriString(imageUrl, UriKind.Absolute))
    {
        Console.WriteLine(
            "\nInvalid remoteImageUrl:\n{0} \n", imageUrl);

Thumbnail written to: C:\Documents\LocalImage_thumb.jpg

Thumbnail written to: ...\bin\Debug\Bloodhound_Puppy_thumb.jpg

● Any edition of Visual Studio 2015 or 2017.

● The Microsoft.Azure.CognitiveServices.Vision.ComputerVision11 client library NuGet package. It isn't necessary to download the package. Installation instructions are provided below.


//
// Free trial subscription keys are generated in the westcentralus
// region. If you use a free trial subscription key, you shouldn't
// need to change the region.

// Specify the Azure region
computerVision.AzureRegion = AzureRegions.Westcentralus;

await GetTextAsync(computerVision, textHeaders.OperationLocation);

private static async Task ExtractLocalHandTextAsync(

Console.WriteLine(
    "\nUnable to open or read localImagePath:\n{0} \n",
return;

// Start the async process to recognize the text
RecognizeTextInStreamHeaders textHeaders =
    await computerVision.RecognizeTextInStreamAsync(
        imageStream, TextRecognitionMode.Handwritten);

await GetTextAsync(computerVision, textHeaders.OperationLo-

// stored from the Operation-Location header
operationLocation.Length - numberOfCharsInOperationId);

await computerVision.GetTextOperationResultAsync(operation-

result.Status == TextOperationStatusCodes.NotStarted)

Console.WriteLine(
    "Server status: {0}, waiting {1} seconds...", result.

RecognizeTextAsync response

A successful response displays the lines of recognized text for each image.

Develop Solutions using Bing Web Search 97


Develop a Bing Web Search query in C#

const string searchTerm = "Microsoft Cognitive Services";

// Used to return search results including relevant headers
struct SearchResult
{
    public String jsonResult;
    public Dictionary<String, String> relevantHeaders;
}

    Console.WriteLine("\nJSON Response:\n");
    Console.WriteLine(JsonPrettyPrint(result.jsonResult));
}
else
{
    Console.WriteLine("Invalid Bing Search API subscription key!");
    Console.WriteLine("Please paste yours into the source code.");
}

Console.Write("\nPress Enter to exit ");
Console.ReadLine();
}

// Perform the Web request and get the response

jsonResult = json,
relevantHeaders = new Dictionary<String, String>()

if (header.StartsWith("BingAPIs-") || header.StartsWith("X-
searchResult.relevantHeaders[header] = response.Head-

return string.Empty;


foreach (char ch in json)
{
    switch (ch)
    {
        case '"':
            if (!ignore) quote = !quote;
            break;
        case '\\':
            if (quote && last != '\\') ignore = true;
            break;
    }

"text": "microsoft cognitive services text analytics",

"displayText": "microsoft cognitive services toolkit",


Searches"

}

}

]

}

}

}

GET https://api.cognitive.microsoft.com/bing/v7.0/search?q=sailing+dinghies&responseFilter=images%2Cvideos%2Cnews&mkt=en-us HTTP/1.1
Ocp-Apim-Subscription-Key: 123456789ABCDE
User-Agent: Mozilla/5.0 (compatible; MSIE 10.0; Windows Phone 8.0; Trident/6.0; IEMobile/10.0; ARM; Touch; NOKIA; Lumia 822)
X-Search-ClientIP: 999.999.999.999
X-Search-Location: lat:47.60357;long:-122.3295;re:100
X-MSEdge-ClientID: <blobFromPriorResponseGoesHere>


GET https://api.cognitive.microsoft.com/bing/v7.0/search?q=sailing+dinghies&answerCount=2&mkt=en-us HTTP/1.1
Ocp-Apim-Subscription-Key: 123456789ABCDE
User-Agent: Mozilla/5.0 (compatible; MSIE 10.0; Windows Phone 8.0; Trident/6.0; IEMobile/10.0; ARM; Touch; NOKIA; Lumia 822)
X-Search-ClientIP: 999.999.999.999
X-Search-Location: lat:47.60357;long:-122.3295;re:100
X-MSEdge-ClientID: <blobFromPriorResponseGoesHere>
Host: api.cognitive.microsoft.com

The response includes only webPages and images.
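The query strings in the two requests above can be assembled in code rather than by hand. The following is a minimal sketch: the `BingQuery` class and its `Build` method are hypothetical helpers, not part of any Bing SDK, and only the endpoint and parameter names come from the requests shown.

```csharp
using System;
using System.Collections.Generic;

public static class BingQuery
{
    private const string Endpoint = "https://api.cognitive.microsoft.com/bing/v7.0/search";

    // Builds the request URL; every value is percent-encoded.
    public static string Build(string searchTerm, params KeyValuePair<string, string>[] options)
    {
        var parts = new List<string> { "q=" + Uri.EscapeDataString(searchTerm) };
        foreach (KeyValuePair<string, string> option in options)
        {
            parts.Add(option.Key + "=" + Uri.EscapeDataString(option.Value));
        }
        return Endpoint + "?" + string.Join("&", parts);
    }

    public static void Main()
    {
        Console.WriteLine(Build(
            "sailing dinghies",
            new KeyValuePair<string, string>("responseFilter", "images,videos,news"),
            new KeyValuePair<string, string>("mkt", "en-us")));
    }
}
```

`Uri.EscapeDataString` encodes the space as `%20` where the requests above use `+`, and the commas in the filter list as `%2C`.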

The following is the response to the above request. Bing returns the top two answers, webpages and

If you set promote to news, the response doesn't include the news answer because it is not a ranked

The Custom Speech Service enables you to create customized language models and acoustic models tailored to your application and your users. By uploading your specific speech and/or text data to the Custom Speech Service, you can create custom models that can be used in conjunction with Microsoft’s existing state-of-the-art speech models.

For example, if you’re adding voice interaction to a mobile phone, tablet or PC app, you can create a custom language model that can be combined with Microsoft’s acoustic model to create a speech-to-text endpoint designed especially for your app. If your application is designed for use in a particular environment or by a particular user population, you can also create and deploy a custom acoustic model with this service.

Both the acoustic and language models are statistical models learned from training data. As a result, they perform best when the speech they encounter when used in applications is similar to the data observed during training. The acoustic and language models in the Microsoft Speech-To-Text engine have been trained on an enormous collection of speech and text and provide state-of-the-art performance for the most common usage scenarios, such as interacting with Cortana on your smart phone, tablet or PC, searching the web by voice or dictating text messages to a friend.

Why use the Custom Speech Service?


● If you have background noise in your data, it is recommended to also have some examples with longer segments of silence, for example, a few seconds, in your data, before and/or after the speech content.

● Each audio file should consist of a single utterance, for example, a single sentence for dictation, a single query, or a single turn of a dialog system.

The transcriptions for all WAV files should be contained in a single plain-text file. Each line of the transcription file should have the name of one of the audio files, followed by the corresponding transcription. The file name and transcription should be separated by a tab (\t).

For example:

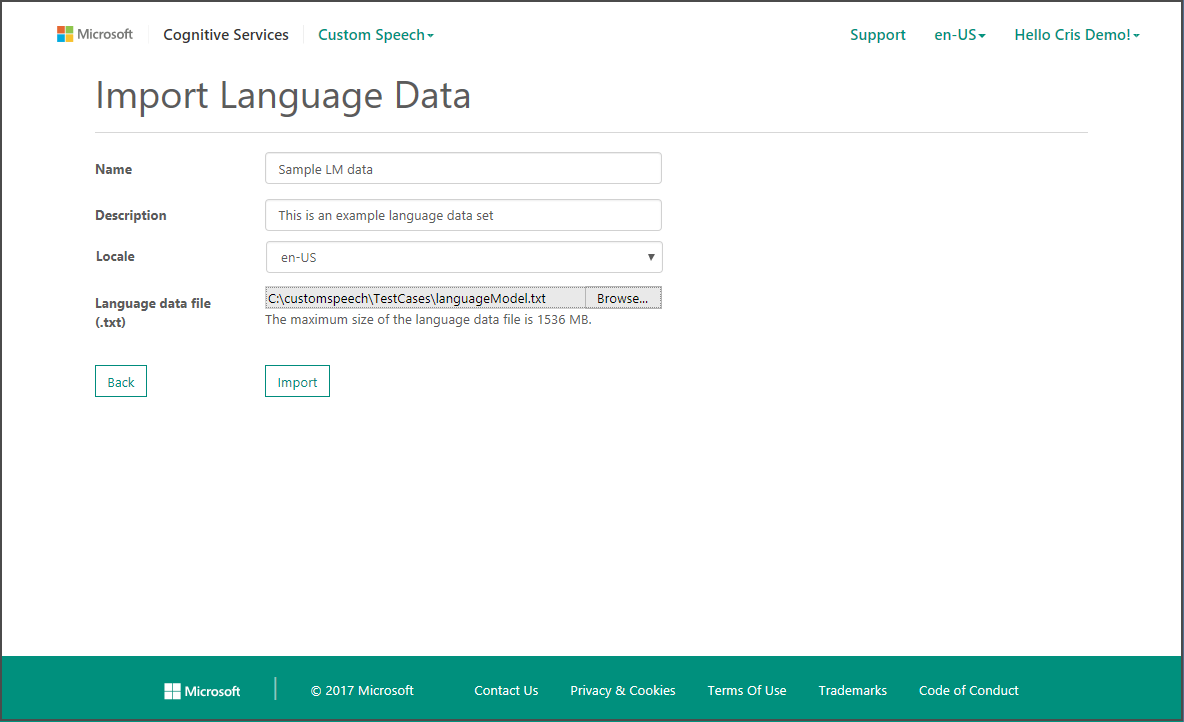
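Before uploading, a transcription file in this tab-separated format can be checked locally. The following is a minimal sketch: the `TranscriptionFile` class is hypothetical, and the file names in the usage example are invented for illustration.

```csharp
using System;
using System.Collections.Generic;

public static class TranscriptionFile
{
    // Maps each audio file name to its transcription.
    // Expects one "<file name><TAB><transcription>" entry per line.
    public static Dictionary<string, string> Parse(IEnumerable<string> lines)
    {
        var result = new Dictionary<string, string>();
        int lineNumber = 0;
        foreach (string line in lines)
        {
            lineNumber++;
            if (string.IsNullOrWhiteSpace(line)) continue; // skip blank lines

            int tab = line.IndexOf('\t');
            if (tab <= 0)
            {
                throw new FormatException(
                    "Line " + lineNumber + ": expected a file name, a tab, and a transcription.");
            }

            // A repeated file name overwrites the earlier entry.
            result[line.Substring(0, tab)] = line.Substring(tab + 1).Trim();
        }
        return result;
    }

    public static void Main()
    {
        var parsed = Parse(new[]
        {
            "speech01.wav\tspeech recognition is awesome",
            "speech02.wav\tthe quick brown fox jumped all over the place"
        });
        foreach (KeyValuePair<string, string> entry in parsed)
        {
            Console.WriteLine(entry.Key + " -> " + entry.Value);
        }
    }
}
```

To check a real file, the lines could be supplied with `File.ReadLines(path)`.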

The following steps are done using the Custom Speech Service Portal18.

17 https://docs.microsoft.com/en-us/azure/cognitive-services/custom-speech-service/customspeech-how-to-topics/cognitive-services-custom-speech-transcription-guidelines

18 https://cris.ai/

also have a status that reflects its current state. Its status will be “Waiting” while it is being queued for

When the status is “Complete”, you can click “Details” to see the acoustic data verification report. The

To do so, click “Acoustic Models” in the “Custom Speech” drop-down menu. You will see a table called “Your models” that lists all of your custom acoustic models. This table will be empty if this is your first use.

The current locale is shown in the table title. Currently, acoustic models can be created for US English only.

In this lesson, you learn how to:

● Prepare the data

Ensure that your Cognitive Services account is connected to a subscription by opening the Cognitive Services Subscriptions20 page.

If no subscriptions are listed, you can either have Cognitive Services create an account for you by clicking the Get free subscription button, or connect to a Custom Speech Service subscription created in the Azure portal by clicking the Connect existing subscription button.

When the import is complete, you will return to the language data table and will see an entry that corresponds to your language data set. Notice that it has been assigned a unique id (GUID). The data will also have a status that reflects its current state. Its status will be “Waiting” while it is being queued for processing, “Processing” while it is going through validation, and “Complete” when the data is ready for use. Data validation performs a series of checks on the text in the file and some text normalization of the data.


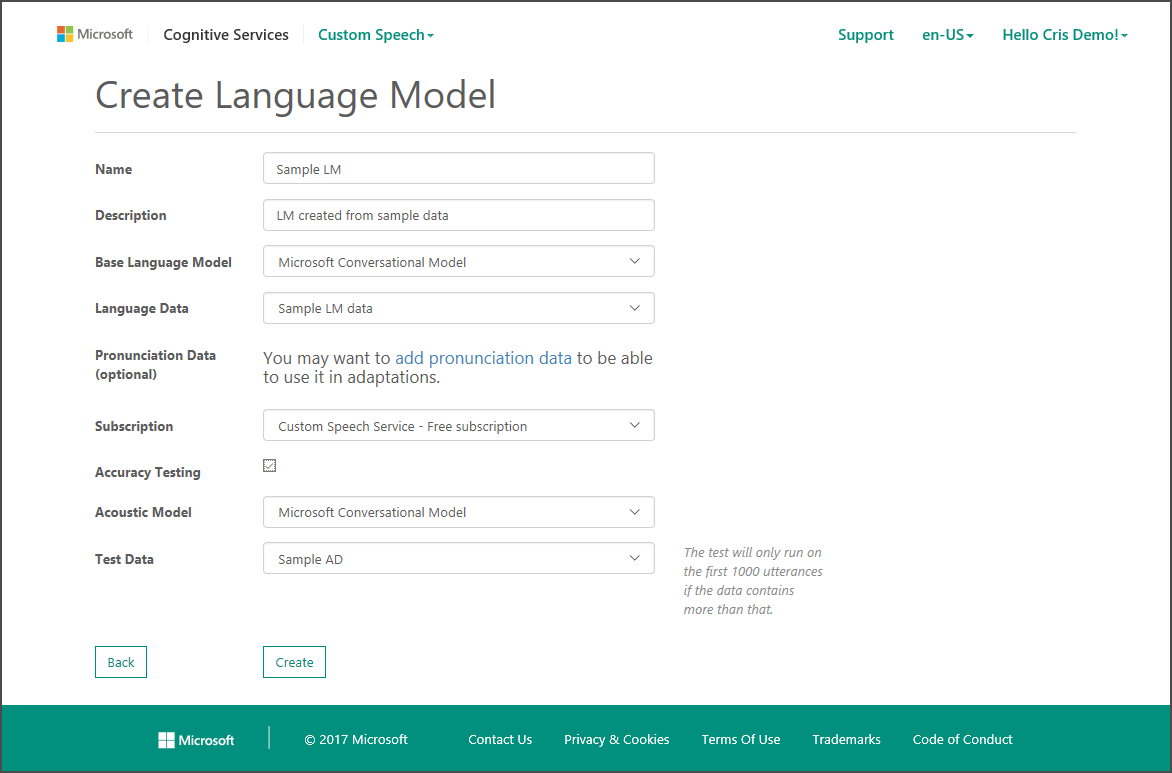

After you have specified the base language model, select the language data set you wish to use for the customization using the “Language Data” drop-down menu.

QnA Maker enables you to power a question and answer service from your semi-structured content like FAQ (Frequently Asked Questions) documents or URLs and product manuals. You can build a model of questions and answers that is flexible to user queries, providing responses that you'll train a bot to use in a natural, conversational way.

An easy-to-use graphical user interface enables you to create, manage, train and get your service up and running without any developer experience.

Prerequisites

If your preferred IDE is Visual Studio, you'll need Visual Studio 2017 to run this code sample on Windows. (The free Community Edition will work.)

paid subscription key from your new API account in your Azure dashboard. To retrieve your key, select

static string key = "YOUR SUBSCRIPTION KEY HERE";

/// <summary>
/// Defines the data source used to create the knowledge base.
/// </summary>

public struct Response
{
    public HttpResponseHeaders headers;
    public string response;

/// </summary>
/// <param name="kb">The data source for the knowledge base.</param>
/// <returns>A <see cref="System.Threading.Tasks.Task{TResult}(QnAMaker.Program.Response)"/>
/// object that represents the HTTP response."</returns>
/// <remarks>The method constructs the URI to create a knowledge base in QnA Maker, and then
/// asynchronously invokes the <see cref="QnAMaker.Program.Post(string, string)"/> method
/// to send the HTTP request.</remarks>
async static Task<Response> PostCreateKB(string kb)
{
    // Builds the HTTP request URI.

    return await Post(uri, kb);
}

/// <summary>
/// Gets the status of the specified QnA Maker operation.

async static Task<Response> GetStatus(string operation)

// Starts the QnA Maker operation to create the knowledge
var response = await PostCreateKB(kb);

// Retrieves the operation ID, so the operation's status
// checked periodically.
var operation = response.headers.GetValues("Location").

// Displays the JSON in the HTTP response returned by the
// PostCreateKB(string) method.
Console.WriteLine(PrettyPrint(response.response));

// Iteratively gets the state of the operation creating the
// knowledge base. Once the operation state is set to
// than "Running" or "NotStarted", the loop ends.
var done = false;
while (true != done)
{

// Displays the JSON in the HTTP response returned by the
// GetStatus(string) method.
Console.WriteLine(PrettyPrint(response.response));

// The thread is
// paused for a number of seconds equal to the Retry-After header value,
// and then the loop continues.
var wait = response.headers.GetValues("Retry-After").First();
Console.WriteLine("Waiting " + wait + " seconds...");
Thread.Sleep(Int32.Parse(wait) * 1000);
}
else
{
    // QnA Maker has completed creating the knowledge base.

{
  "operationState": "Succeeded",
  "createdTimestamp": "2018-06-25T10:30:15Z",
  "lastActionTimestamp": "2018-06-25T10:30:51Z",
  "resourceLocation": "/knowledgebases/1d9eb2a1-de2a-4709-91b2-f6ea8afb6fb9",
  "userId": "0d85ec294c284197a70cfeb51775cd22",
  "operationId": "d9d40918-01bd-49f4-88b4-129fbc434c94"
}
Press any key to continue.

Once your knowledge base is created, you can view it in your QnA Maker Portal, My knowledge bases26 page. Select your knowledge base name, for example QnA Maker FAQ, to view.

3. Replace the key value with a valid subscription key.

4. Replace the kb value with a valid knowledge base ID. Find this value by going to one of your QnA Maker knowledge bases28. Select the knowledge base you want to update. Once on that page, find the ‘kbid=’ in the URL as shown below. Use its value for your code sample.


namespace QnAMaker

26 https://www.qnamaker.ai/Home/MyServices

27 https://westus.dev.cognitive.microsoft.com/docs/services/5a93fcf85b4ccd136866eb37/operations/5ac266295b4ccd1554da7600

28 https://www.qnamaker.ai/Home/MyServices

/// </summary>
/// <param name="s">The JSON to format and indent.</param>
/// <returns>A string containing formatted and indented JSON.</returns>
static string PrettyPrint(string s)
{
    return JsonConvert.SerializeObject(JsonConvert.DeserializeObject(s), Formatting.Indented);
}

/// <summary>
/// Asynchronously sends a PATCH HTTP request.

using (var request = new HttpRequestMessage())

request.Method = HttpMethod.Get;
request.RequestUri = new Uri(uri);
request.Headers.Add("Ocp-Apim-Subscription-Key", key);

var response = await client.SendAsync(request);
var responseBody = await response.Content.ReadAsStrin-
return new Response(response.Headers, responseBody);

/// object that represents the HTTP response."</returns>
/// <remarks>Constructs the URI to get the status of a QnA Maker
/// operation, then asynchronously invokes the <see cref="QnAMaker.Program.Get(string)"/>
/// method to send the HTTP request.</remarks>
async static Task<Response> GetStatus(string operation)
{
    string uri = host + service + operation;
    Console.WriteLine("Calling " + uri + ".");
    return await Get(uri);
}

var operation = response.headers.GetValues("Location").First();
response = await GetStatus(operation);

// Displays the JSON in the HTTP response returned by the
// GetStatus(string) method.
Console.WriteLine(PrettyPrint(response.response));

// The console waits for a key to be pressed before closing.
Console.ReadLine();
}
}
}

Understand what QnA Maker returns

Publish a knowledge base in C#

The following code publishes an existing knowledge base, using the Publish method.

using System;

using System.Collections.Generic;

using System.Linq;

29 https://westus.dev.cognitive.microsoft.com/docs/services/5a93fcf85b4ccd136866eb37/operations/5ac266295b4ccd1554da7600

30 https://www.qnamaker.ai/Home/MyServices

// NOTE: Replace this with a valid knowledge base ID.

using (var request = new HttpRequestMessage())

request.Method = HttpMethod.Post;
request.RequestUri = new Uri(uri);
request.Headers.Add("Ocp-Apim-Subscription-Key", key);

var response = await client.SendAsync(request);
if (response.IsSuccessStatusCode)
{
    return "{'result' : 'Success.'}";
}
else
{
    return await response.Content.ReadAsStringAsync();
}

A successful response is returned in JSON, as shown in the following example:

{

"result": "Success."

}


● Store, synchronize, and query device metadata and state information for all your devices.

● Set device state either per-device or based on common characteristics of devices.

● Automatically respond to a device-reported state change with message routing integration.

4. In the IoT hub pane, enter the following information for your IoT hub:


9. Select Review + create.

10. Review your IoT hub information, then click Create. Your IoT hub might take a few minutes to create. You can monitor the progress in the Notifications pane.

2. Run the following command to get the device connection string for the device you just registered:

az iot hub device-identity show-connection-string --hub-name {YourIoTHubName} --device-id MyDotnetDevice --output table


Review Questions

What criteria must the images meet to use Computer Vision API v2?

Suggested Answer ↓

Suggested Answer ↓

To use the Computer Vision API, you must procure a subscription key. You can use Visual Studio 2015 or 2017 to develop solutions that use the API. You must import the Microsoft.Azure.CognitiveServices.Vision.ComputerVision client library NuGet package into your Visual Studio solution.

Module 6 Module Develop for Azure Storage

Develop Solutions that use Azure Cosmos DB Storage

Global replication

Azure Cosmos DB has a feature referred to as turnkey global distribution that automatically replicates data to other Azure datacenters across the globe without the need to manually write code or build a replication infrastructure.

The consistency levels range from very strong consistency, where reads are guaranteed to be visible

Consider the following points if your application is built by using Cosmos DB SQL API or Table API:

● For many real-world scenarios, session consistency is optimal and it's the recommended option.

You may get stronger consistency guarantees in practice. Consistency guarantees for a read operation

workload. For example, if there are no write operations on the database, a read operation with

looking at the Probabilistic Bounded Staleness (PBS) metric. This metric is exposed in the Azure portal.

Five consistency models offered by Azure Cosmos DB are natively supported by the Azure Cosmos DB

The following table shows the “read concerns” mapping between MongoDB 3.4 and the default consistency level in Azure Cosmos DB. The table shows multi-region and single-region deployments.


Azure Cosmos DB Supported APIs

The Table API in Azure Cosmos DB is a key-value database service built to provide premium capabilities (for example, automatic indexing, guaranteed low latency, and global distribution) to existing Azure Table storage applications without making any app changes.

Gremlin API


(RUs) per second so that the import tools are not throttled. The throughput can be reverted back to the typical values after the import is complete.

Azure Cosmos DB containers can be created as fixed or unlimited in the Azure portal. Fixed-size containers have a maximum limit of 10 GB and a 10,000 RU/s throughput. To create a container as unlimited, you must specify a partition key and a minimum throughput of 1,000 RU/s. Azure Cosmos DB containers can also be configured to share throughput among the containers in a database.

If you created a fixed container with no partition key or a throughput less than 1,000 RU/s, the container will not automatically scale. To migrate the data from a fixed container to an unlimited container, you need to use the data migration tool or the Change Feed library.

A physical partition is a fixed amount of reserved solid-state drive (SSD) backend storage combined with a variable amount of compute resources (CPU and memory). Each physical partition is replicated for high availability. A physical partition is an internal concept of Azure Cosmos DB, and physical partitions are transient. Azure Cosmos DB will automatically scale the number of physical partitions based on your workload.

A logical partition is a partition within a physical partition that stores all the data associated with a single partition key value. Partition ranges can be dynamically subdivided to seamlessly grow the database as the application grows while simultaneously maintaining high availability. When a container meets the partitioning prerequisites, partitioning is completely transparent to your application. Azure Cosmos DB handles distributing data across physical and logical partitions and routing query requests to the right

Then, you can create a DocumentClient instance by using the endpoint from your Azure Cosmos DB

To reference any resource in the software development kit (SDK), you will need a URI. The UriFactory

If you want to query the database, you can perform SQL queries by using the SqlQuerySpec class:

var query = client.CreateDocumentQuery<Family>(
    collectionUri,
    new SqlQuerySpec()
    {
        QueryText = "SELECT * FROM f WHERE (f.surname = @lastName)",
        Parameters = new SqlParameterCollection()
        {
            new SqlParameter("@lastName", "Andt")
        }
    },
    DefaultOptions
);

Develop Solutions that use a Relational Database 157

interruption. SQL Database has additional features that are not available in SQL Server, such as built-in intelligence and management. Azure SQL Database offers several deployment options:

The main differences between these options are listed in the following table:

A database copy is a snapshot of the source database as of the time of the copy request. You can select the same server or a different server, its service tier and compute size, or a different compute size within the same service tier (edition). After the copy is complete, it becomes a fully functional, independent database. At this point, you can upgrade or downgrade it to any edition. The logins, users, and permissions can be managed independently.

Note: Automated database backups are used when you create a database copy.

After the copying succeeds and before other users are remapped, only the login that initiated the copying, the database owner, can log in to the new database.

Copy a database by using the Azure portal

initiated the database copy becomes the database owner of the new database and is assigned a new

impedance mismatch between the relational and object-oriented worlds. The goal of the library is to


The Entity Framework provider model allows Entity Framework to be used with different types of database servers. For example, one provider can be plugged in to allow Entity Framework to be used against Microsoft SQL Server, whereas another provider can be plugged in to allow Entity Framework to be used against Oracle Database. There are many current providers in the market for databases, including:

SQL Server provider

This database provider allows Entity Framework Core to be used with Microsoft SQL Server (including Microsoft Azure SQL Database). The provider is maintained as an open-source project as part of the Entity Framework Core repository on GitHub (https://github.com/aspnet/EntityFrameworkCore).

}

Logically, our database has a table that is a collection of these blog instances. Without knowing anything about Entity Framework, we would probably create a class that looks like this:

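protected override void OnModelCreating(ModelBuilder modelBuilder)
{
    // HasKey returns a key builder, so each property is configured
    // in its own statement rather than chained after HasKey.
    modelBuilder.Entity<Blog>()
        .HasKey(c => c.BlogId);
    modelBuilder.Entity<Blog>()
        .Property(b => b.Url)
        .IsRequired();
    modelBuilder.Entity<Blog>()
        .Property(b => b.Description);
}

Data annotations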

After you have a model, the primary class your application interacts with is System.Data.Entity.

● Iterating the results in a for loop.

● Using an operator such as ToList, ToArray, Single, or Count.
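The effect of these operators can be seen with a small, self-contained sketch. It uses LINQ to Objects over an in-memory list as a stand-in for a database-backed set, which is an assumption made so the example runs without a database; the class name and the evaluation counter are illustrative only.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class DeferredExecutionDemo
{
    // Counts how often the filter actually runs.
    public static int Evaluations;

    public static IEnumerable<string> BuildQuery(IEnumerable<string> urls)
    {
        // Defining the query does not execute it.
        return urls.Where(u =>
        {
            Evaluations++;
            return u.StartsWith("https", StringComparison.Ordinal);
        });
    }

    public static void Main()
    {
        var urls = new List<string> { "https://a.example", "http://b.example", "https://c.example" };

        IEnumerable<string> query = BuildQuery(urls);
        Console.WriteLine("After defining the query: " + Evaluations + " evaluations");

        // ToList forces the query to execute.
        List<string> results = query.ToList();
        Console.WriteLine("After ToList: " + Evaluations + " evaluations, " + results.Count + " results");
    }
}
```

The filter runs zero times when the query is defined and three times once `ToList` is called. With a database-backed query the same point in the code is where the query would be sent to the database.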

For example, if your storage account is named mystorageaccount, then the default endpoint for Blob storage is:

http://mystorageaccount.blob.core.windows.net
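The endpoint follows a fixed pattern, so it can be derived from the account name. A minimal sketch, assuming a hypothetical helper class `BlobEndpoints`; only the host name pattern comes from the example above.

```csharp
using System;

public static class BlobEndpoints
{
    // Builds the default Blob storage endpoint for a storage account.
    public static Uri DefaultBlobEndpoint(string accountName, bool useHttps = true)
    {
        if (string.IsNullOrWhiteSpace(accountName))
        {
            throw new ArgumentException("A storage account name is required.", nameof(accountName));
        }

        string scheme = useHttps ? "https" : "http";
        return new Uri(scheme + "://" + accountName + ".blob.core.windows.net");
    }

    public static void Main()
    {
        Console.WriteLine(DefaultBlobEndpoint("mystorageaccount", useHttps: false));
    }
}
```

The sketch defaults to HTTPS, which is a choice made here rather than something the text above states.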

Blobs

Azure Storage supports three types of blobs:


You may only tier your object storage data to Hot, Cool, or Archive in Blob storage or General Purpose v2 (GPv2) accounts. General Purpose v1 (GPv1) accounts do not support tiering. However, customers can easily convert their existing GPv1 or Blob storage accounts to GPv2 accounts through a simple one-click process in the Azure portal. GPv2 provides a new pricing structure for blobs, files, and queues, and access to a variety of other new storage features as well. Furthermore, going forward some new features and price cuts will only be offered in GPv2 accounts. Therefore, customers should evaluate using GPv2 accounts but only use them after reviewing the pricing for all services as some workloads can be more expensive on GPv2 than GPv1.

Blob storage and GPv2 accounts expose the Access Tier attribute at the account level, which allows you to specify the default storage tier as Hot or Cool for any blob in the storage account that does not have an explicit tier set at the object level. For objects with the tier set at the object level, the account tier will not apply. The Archive tier can only be applied at the object level. You can switch between these storage tiers at any time.
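The rule for which tier applies to a blob can be expressed as a small function. This is a sketch of the rule only: the `AccessTier` enum and `TierResolution` class are hypothetical and are not types from the Azure Storage client library.

```csharp
using System;

public enum AccessTier { Hot, Cool, Archive }

public static class TierResolution
{
    // Returns the tier that applies to a blob: its own tier if one is set,
    // otherwise the account-level default.
    public static AccessTier Effective(AccessTier accountDefault, AccessTier? blobTier)
    {
        // The account default can only be Hot or Cool.
        if (accountDefault == AccessTier.Archive)
        {
            throw new ArgumentException(
                "Archive can only be applied at the object level.", nameof(accountDefault));
        }

        return blobTier ?? accountDefault;
    }

    public static void Main()
    {
        Console.WriteLine(Effective(AccessTier.Hot, null));
        Console.WriteLine(Effective(AccessTier.Hot, AccessTier.Archive));
    }
}
```

A blob with no explicit tier inherits the account default, while a blob tiered to Archive keeps that tier regardless of the account setting.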

Cool access tier

The Cool storage tier has lower storage costs and higher access costs than Hot storage. This tier is intended for data that will remain in the Cool tier for at least 30 days. Example usage scenarios for the Cool storage tier include:

Archive access tier

Archive storage has the lowest storage cost and higher data retrieval costs compared to Hot and Cool storage. This tier is intended for data that can tolerate several hours of retrieval latency and will remain in the Archive tier for at least 180 days.


A blob can be updated only by using operations appropriate for its blob type: writing a block or list of blocks to a block blob, appending blocks to an append blob, and writing pages to a page blob.

Block blobs let you upload large blobs efficiently. Block blobs are composed of blocks, each of which is identified by a block ID. You create or modify a block blob by writing a set of blocks and committing them by their block IDs. Each block can be a different size, up to a maximum of 100 MB (4 MB for requests using REST versions before 2016-05-31), and a block blob can include up to 50,000 blocks. The maximum size of a block blob is therefore slightly more than 4.75 TB (100 MB × 50,000 blocks). For REST versions before 2016-05-31, the maximum size of a block blob is a little more than 195 GB (4 MB × 50,000 blocks). If you are writing a block blob that is no more than 256 MB (64 MB for requests using REST versions before 2016-05-31) in size, you can upload it in its entirety with a single write operation.
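The size limits quoted above follow from multiplying the maximum block size by the maximum block count. The worked arithmetic below assumes that MB, GB, and TB are read as binary units (a factor of 1024 between steps), which is the reading under which the quoted figures come out:

$$
\begin{aligned}
100\ \text{MB} \times 50{,}000 &= 5{,}000{,}000\ \text{MB} = \frac{5{,}000{,}000}{1024^{2}}\ \text{TB} \approx 4.77\ \text{TB} \\
4\ \text{MB} \times 50{,}000 &= 200{,}000\ \text{MB} = \frac{200{,}000}{1024}\ \text{GB} \approx 195.3\ \text{GB}
\end{aligned}
$$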

Storage clients default to a 128 MB maximum single blob upload, settable using the SingleBlobUploadThresholdInBytes property of the BlobRequestOptions object. When a block blob upload is larger than the value in this property, storage clients break the file into blocks. You can set the number of threads used to upload the blocks in parallel on a per-request basis using the ParallelOperationThreadCount property of the BlobRequestOptions object.
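As a rough sketch of how these two properties might be set for a single upload, the example below assumes the WindowsAzure.Storage NuGet package (namespace Microsoft.WindowsAzure.Storage) and a valid connection string. The class name, the helper CreateUploadOptions, the 32 MB threshold, the thread count of 4, and all method parameters are hypothetical choices for illustration, not values recommended by the course.

```csharp
using System.Threading.Tasks;
using Microsoft.WindowsAzure.Storage;       // assumption: WindowsAzure.Storage NuGet package
using Microsoft.WindowsAzure.Storage.Blob;

public static class BlockBlobUploadSketch
{
    // Builds per-request options: uploads larger than the threshold are split into
    // blocks, and those blocks are uploaded by the given number of parallel threads.
    public static BlobRequestOptions CreateUploadOptions(long thresholdBytes, int parallelThreads)
    {
        return new BlobRequestOptions
        {
            SingleBlobUploadThresholdInBytes = thresholdBytes,
            ParallelOperationThreadCount = parallelThreads
        };
    }

    // connectionString, containerName, blobName, and localPath are hypothetical inputs.
    public static async Task UploadAsync(
        string connectionString, string containerName, string blobName, string localPath)
    {
        CloudStorageAccount account = CloudStorageAccount.Parse(connectionString);
        CloudBlobClient client = account.CreateCloudBlobClient();
        CloudBlobContainer container = client.GetContainerReference(containerName);
        await container.CreateIfNotExistsAsync();

        CloudBlockBlob blob = container.GetBlockBlobReference(blobName);

        // Illustrative values: a 32 MB single-upload threshold and 4 upload threads.
        BlobRequestOptions options = CreateUploadOptions(32L * 1024 * 1024, 4);

        // Passing null keeps the default access condition and operation context.
        await blob.UploadFromFileAsync(localPath, null, options, null);
    }
}
```

Because the options are passed per request, different uploads in the same application can use different thresholds and thread counts.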

An append blob is composed of blocks and is optimized for append operations. When you modify an ...

... serverless architectures. It does so without the need for complicated code or expensive and inefficient ... Functions, Azure Logic Apps, or even to your own custom HTTP listener, and you only pay for what you ...

... but your scenario requires immediate responsiveness, event-based architecture can be especially efficient.

Blob storage accounts

Event Schema

Blob storage events contain all the information you need to respond to changes in your data. You can identify a Blob storage event because the eventType property starts with “Microsoft.Storage”. Additional information about the usage of Event Grid event properties is documented in the Event Grid event schema.
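The eventType check described above can be sketched as follows. This is a minimal illustration that assumes a single event delivered as one JSON object and uses System.Text.Json; the class name and the trimmed-down sample payloads are hypothetical, and real events carry more properties than shown here.

```csharp
using System;
using System.Text.Json;

public static class BlobEventFilter
{
    // Returns true when the event's eventType property starts with "Microsoft.Storage".
    public static bool IsBlobStorageEvent(string eventJson)
    {
        using JsonDocument doc = JsonDocument.Parse(eventJson);
        JsonElement root = doc.RootElement;

        return root.ValueKind == JsonValueKind.Object
            && root.TryGetProperty("eventType", out JsonElement eventType)
            && eventType.ValueKind == JsonValueKind.String
            && eventType.GetString().StartsWith("Microsoft.Storage", StringComparison.Ordinal);
    }

    public static void Main()
    {
        // Hypothetical, shortened payloads used only to exercise the check.
        string blobEvent = "{\"eventType\":\"Microsoft.Storage.BlobCreated\",\"subject\":\"/example\"}";
        string otherEvent = "{\"eventType\":\"Contoso.Items.ItemReceived\",\"subject\":\"/example\"}";

        Console.WriteLine(IsBlobStorageEvent(blobEvent));   // True
        Console.WriteLine(IsBlobStorageEvent(otherEvent));  // False
    }
}
```

A handler that receives a batch of events could apply the same check to each element before dispatching on the specific event type.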


With a Shared Access Signature, you can enable clients to access resources in your storage account without sharing your account key with them.

r=b&sp=rw&sig=Z%2FRHIX5Xcg0Mq2rqI3OlWTjEg2tYkboXr1P9ZUXDtkk%3

sig=Z%2FRHIX5Xcg0Mq2rqI3OlWTjEg2tYkboXr1P9ZUXDtkk%3D

Valet key pattern that uses Shared Access Signatures

A common scenario where an SAS is useful is a service where users read and write their own data to your storage account. In a scenario where a storage account stores user data, there are two typical design patterns:


Names are case-insensitive. Note that metadata names preserve the case with which they were created, but are case-insensitive when set or read. If two or more metadata headers with the same name are submitted for a resource, the Blob service returns status code 400 (Bad Request).

The metadata consists of name/value pairs. The total size of all metadata pairs can be up to 8 KB.
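The naming and size rules above can be checked on the client before a request is sent. The sketch below is one hypothetical way to do that and is not part of any storage SDK. It assumes that size is approximated as the UTF-8 byte count of all names and values, which may differ from how the service itself measures the 8 KB limit.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

public static class MetadataPrecheck
{
    public const int MaxTotalBytes = 8 * 1024; // the 8 KB limit stated above

    // Returns null when the pairs pass both checks, otherwise a description of the problem.
    public static string Validate(IEnumerable<KeyValuePair<string, string>> pairs)
    {
        // Names compare case-insensitively, so "Owner" and "owner" are the same name.
        var seen = new HashSet<string>(StringComparer.OrdinalIgnoreCase);
        int totalBytes = 0;

        foreach (KeyValuePair<string, string> pair in pairs)
        {
            if (!seen.Add(pair.Key))
            {
                // The service would answer a duplicate name with 400 (Bad Request).
                return $"Duplicate metadata name: {pair.Key}";
            }

            // Assumption: size is approximated as UTF-8 bytes of names plus values.
            totalBytes += Encoding.UTF8.GetByteCount(pair.Key)
                        + Encoding.UTF8.GetByteCount(pair.Value);
        }

        return totalBytes > MaxTotalBytes
            ? $"Metadata is {totalBytes} bytes; the limit is {MaxTotalBytes}"
            : null;
    }

    public static void Main()
    {
        var pairs = new List<KeyValuePair<string, string>>
        {
            new KeyValuePair<string, string>("Owner", "alice"),
            new KeyValuePair<string, string>("owner", "bob")
        };

        Console.WriteLine(Validate(pairs) ?? "Metadata looks valid");
    }
}
```

Rejecting a duplicate name locally avoids a round trip that would end in a 400 response.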

Retrieving Properties and Metadata

The GET and HEAD operations both retrieve metadata headers for the specified container or blob. These operations return headers only; they do not return a response body. The URI syntax for retrieving metadata headers on a container is as follows:

blob?comp=metadata

Setting Metadata Headers

The URI syntax for setting metadata headers on a blob is as follows:

... represented as standard HTTP headers; the difference between them is in the naming of the headers.

The CloudStorageAccount class contains the CreateCloudBlobClient method that gives you ...

With a hydrated reference, you can perform actions such as fetching the properties (metadata) of the ...

container.Properties

This class has properties that can be set to change the container, including (but not limited to) those in the following table.

A Shared Access Signature (SAS) is a URI that grants restricted access rights to containers, binary large ...

● An ad hoc SAS. When you create an ad hoc SAS, the start time, expiration time, and permissions for ...

● ... Access Signatures. When you associate an SAS with a stored access policy, the SAS inherits the ...

Like with Azure Files, you can use AzCopy to copy blobs between storage containers. By default, does ...

... operation runs in the background by using spare bandwidth capacity that has no Service Level Agreement ...

... structures such as relational data, JSON, spatial, and XML. What are the three deployment options for ...

> Click to see suggested answer